Log processing

- Latest Dynatrace

- Explanation

- 4-min read

Dynatrace can reshape incoming log data for better understanding, analysis, or further transformation.

Information can be logged in a very wide variety of formats depending on the application, process, operating system, or other factors. Log pre-processing and processing with OpenPipeline offers a central and flexible way of extracting value from those raw log lines.

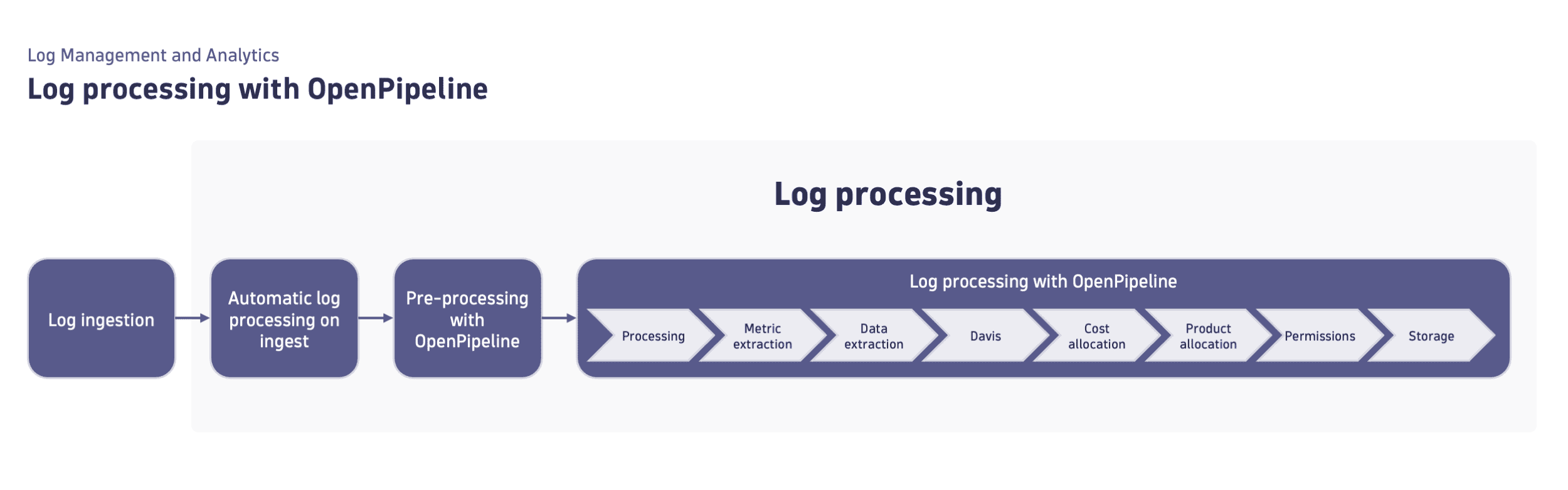

Log processing comprises the following steps.

1. Automatic log processing on ingest

Dynatrace processes logs upon ingestion to ensure that your log lines are ready for automation, troubleshooting, and analysis. This unified approach allows you to switch between different log ingest strategies with zero or minimum configuration.

Automatic log processing on ingest includes timestamp extraction, severity extraction, and log payload parsing.

For more details, see Automatic log processing at ingestion.

2. Pre-processing with OpenPipeline

Dynatrace applies log pre-processing to enrich, normalize, and prepare log data for analysis. Thanks to this structured approach, logs from supported technologies are enriched without manual configuration, have better structure and metadata, and are connected with their traces.

Pre-processing with OpenPipeline ensures consistent log structure, improved queryability, and seamless integration with Dynatrace observability features.

For more information, see Log pre-processing with OpenPipeline with ready-made bundles.

3. Log processing with OpenPipeline

Log processing with OpenPipeline involves using the OpenPipeline solution to handle logs before they are stored in Grail. This step includes different stages, such as processing, metric and data extraction, permissions, and storage. See Log processing with OpenPipeline for the detailed overview of all the stages.

We recommend utilizing log processing with OpenPipeline as a scalable, powerful solution to manage, process, and analyze logs. If you don't have access to OpenPipeline, use the classic log processing pipeline.