Detect threats against your AWS Secrets with Security Investigator


In today's digital landscape, protecting your cryptographic secrets in a cloud environment is more critical than ever. Secrets such as API keys, passwords, and encryption keys are vital parts of your applications; if they leak, your whole business could be jeopardized. That's why analyzing threats against secrets is essential to ensure the integrity, confidentiality, and availability of your data.

In the following, you'll learn how Security Investigator can help you detect threats against your AWS secrets by analyzing your AWS CloudTrail logs.

Target audience

This article is intended for security engineers and site reliability engineers who are involved in the maintenance and security of cloud applications in AWS.

Prerequisites

- Store your CloudTrail logs in an S3 bucket or in CloudWatch.
- Send CloudTrail logs to Dynatrace. There are two options to stream logs:
  - Amazon S3 (recommended)
  - Amazon CloudWatch
- Knowledge of

Before you begin

Follow the steps below to fetch the AWS CloudTrail logs from Grail using Security Investigator and prepare them for analysis.

Fetch AWS CloudTrail logs from Grail

Once your CloudTrail logs are ingested into Dynatrace, follow these steps to fetch the logs.

- Open Security Investigator.
- Select Case to create an investigation scenario.
- In the query input section, insert the DQL query below.

fetch logs, from: -30min
| filter aws.service == "cloudtrail"

- Select Run to display results.

The query searches for logs from the last 30 minutes that have been forwarded from an AWS log group containing the word cloudtrail.

If you know which Grail bucket stores your CloudTrail logs, add a filter for that bucket to improve query performance. Replace my_aws_bucket with the name of your bucket.

fetch logs, from: -30min
| filter dt.system.bucket == "my_aws_bucket"
| filter aws.service == "cloudtrail"

For details, see DQL Best practices.

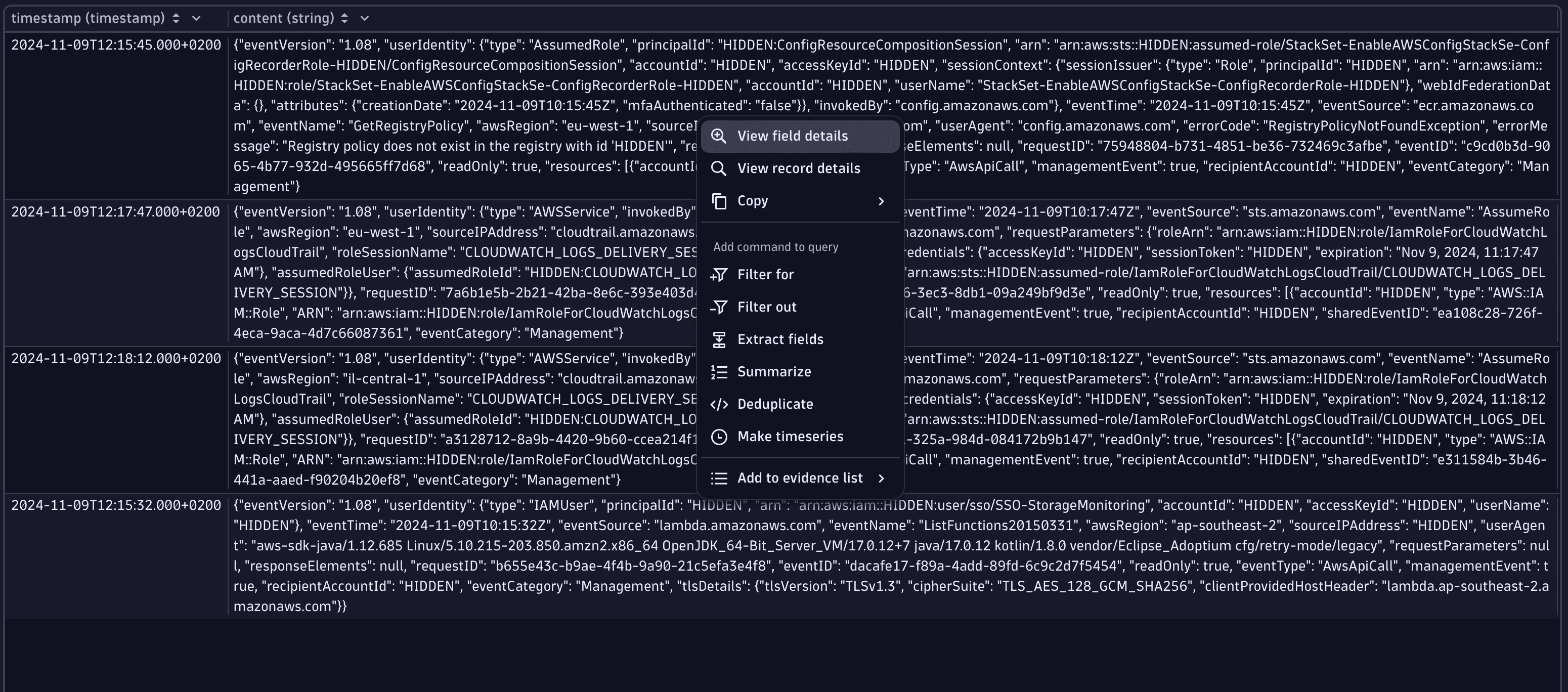

The results table is populated with the JSON-formatted events.

- Right-click an event and select View field details to see the JSON-formatted event in a structured way. This helps investigators grasp the content of the event much faster.
- Navigate between events in the results table with the keyboard arrow keys or the navigation buttons in the upper part of the View field details window.

Prepare data for analysis

Follow the steps below to simplify log analysis, speed up investigations, and maintain the required precision for analytical tasks.

-

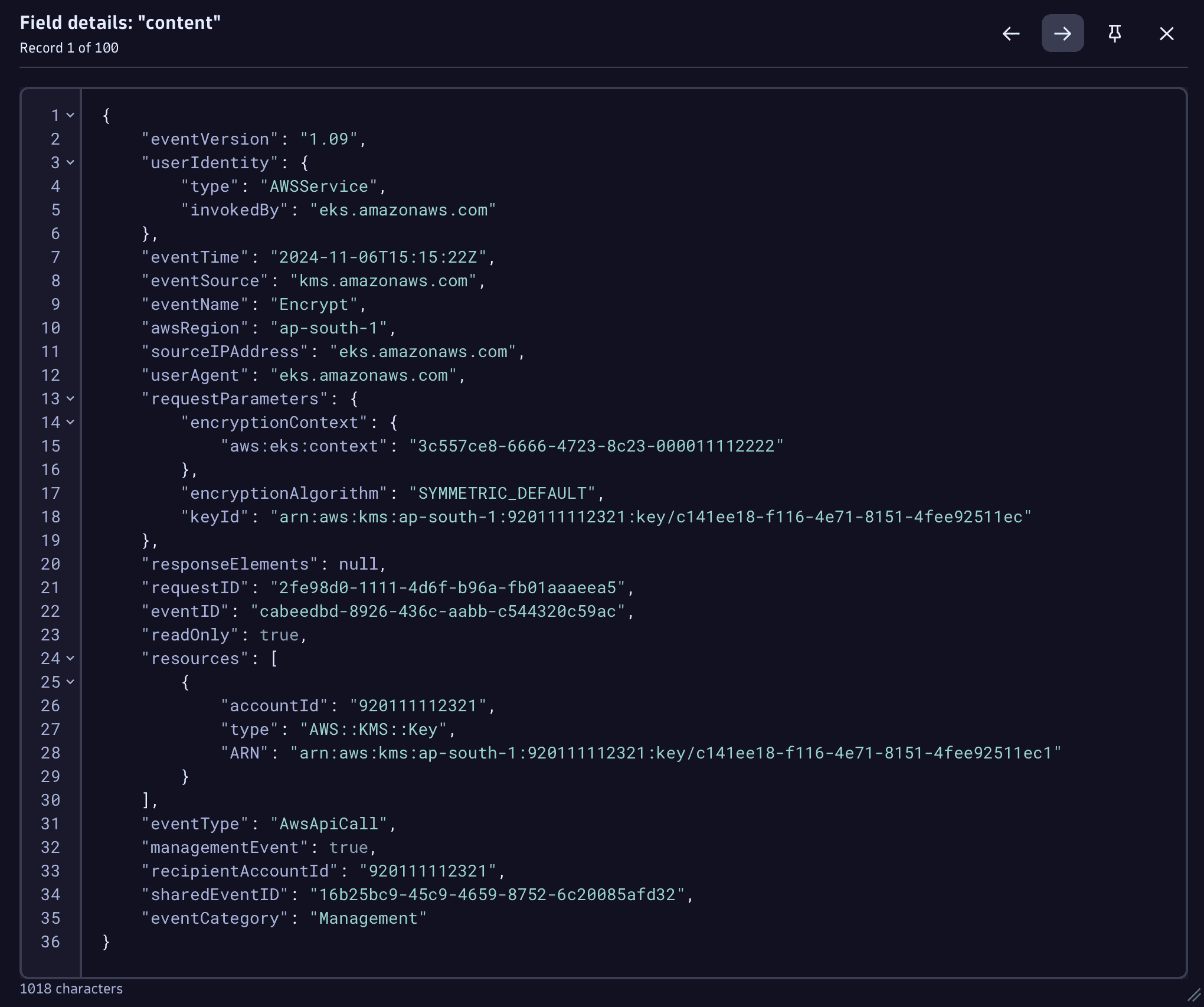

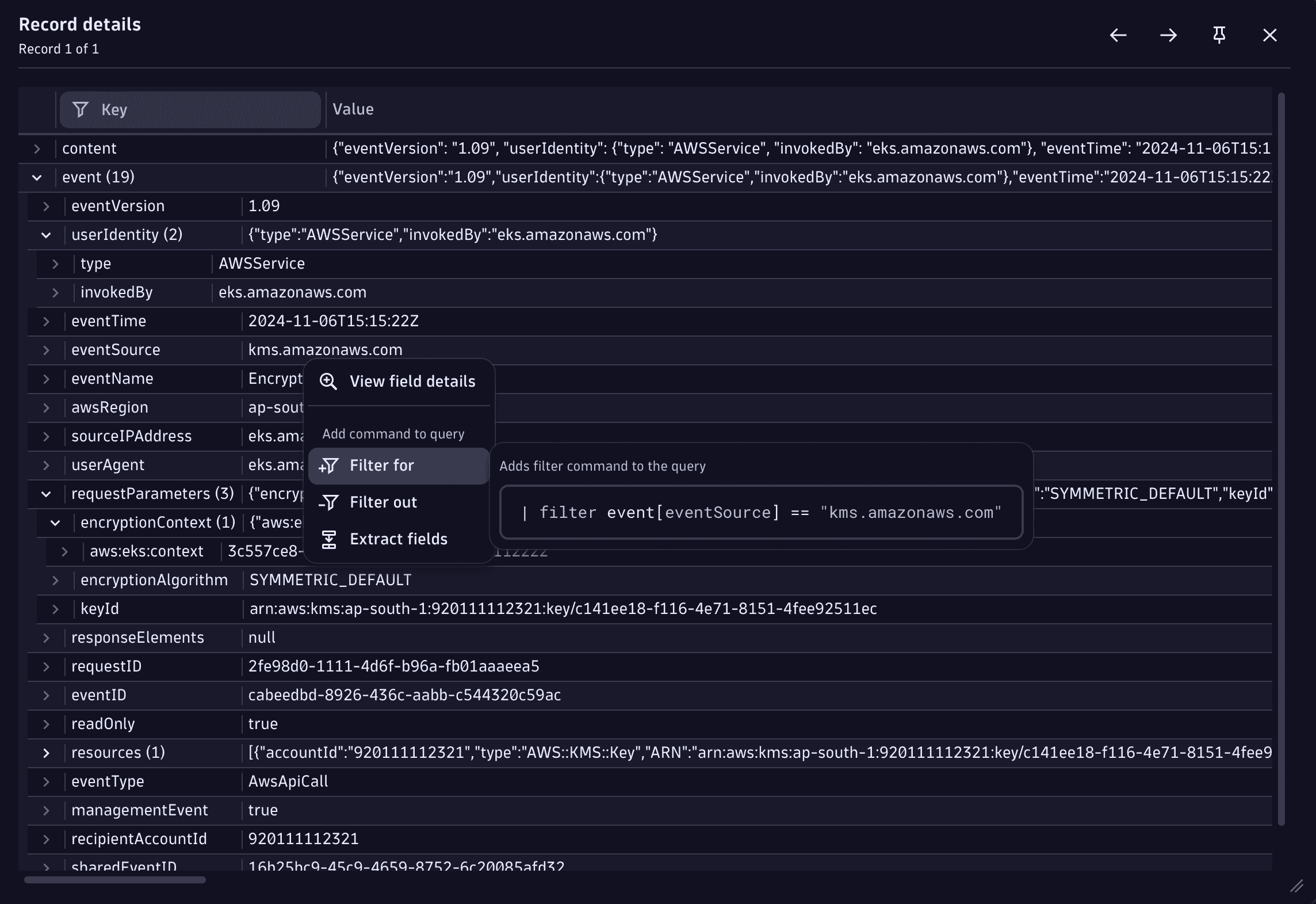

Add to your DQL query the parse command to extract the required data from the log records into separate fields.

-

Add the JSON matcher to extract the JSON-formatted log content as a JSON object into a separate field called

event.Your DQL query should look like this:

fetch logs, from: -30min| filter dt.system.bucket == "my_aws_bucket"| filter aws.service == "cloudtrail"| parse content, "JSON:event" -

Double-click on any record in the results table to view the object in the Record details view. Expand the JSON elements to navigate through the object faster and add filters based on its content.
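To see what the filters and the JSON matcher do to each record, the following Python sketch reproduces them offline on a few records. It is an illustration under assumptions, not a Dynatrace API: the record layout (flat keys such as dt.system.bucket and aws.service, raw JSON in content), the sample values, and the `parse_cloudtrail` helper are all hypothetical.

```python
import json

# Hypothetical exported records: field names follow the DQL filters above,
# and "content" holds the raw CloudTrail event as a JSON string.
records = [
    {
        "dt.system.bucket": "my_aws_bucket",
        "aws.service": "cloudtrail",
        "content": json.dumps({
            "eventName": "GetSecretValue",
            "awsRegion": "us-east-1",
            "userIdentity": {"arn": "arn:aws:iam::111122223333:user/alice"},
        }),
    },
    # Dropped by the aws.service filter: not a CloudTrail record.
    {"dt.system.bucket": "my_aws_bucket", "aws.service": "lambda", "content": "{}"},
    # Kept by the filters, but its content is not valid JSON.
    {"dt.system.bucket": "my_aws_bucket", "aws.service": "cloudtrail", "content": "not json"},
]


def parse_cloudtrail(records, bucket="my_aws_bucket"):
    """Keep CloudTrail records from one bucket and decode `content` into `event`."""
    parsed = []
    for record in records:
        if record.get("dt.system.bucket") != bucket:
            continue
        if record.get("aws.service") != "cloudtrail":
            continue
        try:
            event = json.loads(record.get("content", ""))
        except json.JSONDecodeError:
            event = None  # in this sketch, unparsable content leaves `event` empty
        parsed.append({**record, "event": event})
    return parsed


events = parse_cloudtrail(records)
# Nested values are read the same way the DQL examples read event[userIdentity][arn].
print(events[0]["event"]["userIdentity"]["arn"])
```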

Get started

The following use cases show how to build on the above query to analyze threats against your AWS secrets with Dynatrace:

- Detect externally generated keys in AWS KMS
- Detect unprivileged requests trying to read Secrets
- Detect requesting non-existing secrets

Detect externally generated keys in AWS KMS

When creating a new key in AWS Key Management Service (KMS), you can choose the key material origin for the key, that is, whether the key material is kept under AWS control or handled externally.

By default, the key material origin is AWS_KMS, which means that KMS creates the key material.

When key material is handled externally, the risk of a leak increases, endangering the data that the key protects: the key material could leak from a system outside AWS, and you might not know everywhere it is stored.

To detect key creations that used external key material:

- Create a filter to fetch only events with the name CreateKey.
- Add a statement to filter out all origins that begin with AWS_. The AWS KMS API currently documents four origins: AWS_KMS, AWS_CLOUDHSM, EXTERNAL, and EXTERNAL_KEY_STORE. You could filter for the EXTERNAL origin only, but filtering out the AWS_ origins also catches EXTERNAL_KEY_STORE and is more future-proof. Your DQL query should look like this:

fetch logs, from: -30min
| filter dt.system.bucket == "my_aws_bucket"
| filter aws.service == "cloudtrail"
| parse content, "json:event"
| filter event[eventName] == "CreateKey"
| filterOut startsWith(event[requestParameters][origin], "AWS_")
| fields {
    eventName = event[eventName],
    origin = event[requestParameters][origin],
    keyUsage = event[responseElements][keyMetadata][keyUsage],
    region = event[awsRegion],
    userARN = event[userIdentity][arn],
    keyId = event[responseElements][keyMetadata][keyId]
  }

As a result, you get a table with the eventName, origin, keyUsage, region, userARN, and keyId of every key created with an origin that doesn't begin with AWS_.
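The prefix check is what makes the filter future-proof. The following Python sketch applies the same logic to a few hypothetical, already-parsed CreateKey events. The `create_key_event` and `external_key_creations` helpers and all sample values are invented for illustration, and treating a missing origin as the default AWS_KMS is an assumption of the sketch, not documented DQL null handling.

```python
def create_key_event(key_id, origin, user):
    """Build a minimal, hypothetical CreateKey event."""
    return {
        "eventName": "CreateKey",
        "awsRegion": "eu-west-1",
        "userIdentity": {"arn": f"arn:aws:iam::111122223333:user/{user}"},
        "requestParameters": {"origin": origin},
        "responseElements": {"keyMetadata": {"keyId": key_id, "keyUsage": "ENCRYPT_DECRYPT"}},
    }


events = [
    create_key_event("key-1", "AWS_KMS", "alice"),
    create_key_event("key-2", "EXTERNAL", "bob"),
    create_key_event("key-3", "AWS_CLOUDHSM", "alice"),
    create_key_event("key-4", "EXTERNAL_KEY_STORE", "bob"),
    {"eventName": "Decrypt", "awsRegion": "eu-west-1", "requestParameters": {}},
]


def external_key_creations(events):
    """Mirror the CreateKey filter, the filterOut on AWS_ origins, and the fields projection."""
    rows = []
    for event in events:
        if event.get("eventName") != "CreateKey":
            continue
        # Assumption: a missing origin means the default, AWS_KMS.
        origin = (event.get("requestParameters") or {}).get("origin") or "AWS_KMS"
        if origin.startswith("AWS_"):
            continue  # origins managed through AWS (AWS_KMS, AWS_CLOUDHSM)
        metadata = (event.get("responseElements") or {}).get("keyMetadata") or {}
        rows.append({
            "eventName": event["eventName"],
            "origin": origin,
            "keyUsage": metadata.get("keyUsage"),
            "region": event.get("awsRegion"),
            "userARN": (event.get("userIdentity") or {}).get("arn"),
            "keyId": metadata.get("keyId"),
        })
    return rows


for row in external_key_creations(events):
    print(row["keyId"], row["origin"], row["userARN"])
```

With this sample data, the sketch reports key-2 (EXTERNAL) and key-4 (EXTERNAL_KEY_STORE); a check for origin == "EXTERNAL" alone would have missed key-4.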

Detect unprivileged requests trying to read Secrets

Unauthorized requests to read secrets are an indication of either a hacking attempt or a system misconfiguration. They might mean that an attacker has compromised credentials from your system and is now trying to extract secrets from your AWS account (luckily, without success so far).

In this use case, we go through two scenarios that target different unprivileged attempts to access your secrets: requesting secrets without KMS privileges and requesting unauthorized secrets.

Requests without KMS privileges

If an account lacks KMS privileges, you can assume it was not supposed to access any secrets in your environment. If it still tries to, this indicates credential misuse (malicious or accidental) or a misconfiguration. Either way, it requires your attention.

To check your CloudTrail logs for such attempts:

- Create a filter to fetch only GetSecretValue events with an AccessDenied error code.
- Add a filter condition to keep only errors with the message Access to KMS is not allowed.
- Aggregate the results by sourceIPAddress, awsRegion, and the ARN of the user who made the unauthorized attempts. Your DQL query should look like this:

fetch logs, from: -30min
| filter dt.system.bucket == "my_aws_bucket"
| filter aws.service == "cloudtrail"
| parse content, "json:event"
| filter event[eventName] == "GetSecretValue"
    and event[errorCode] == "AccessDenied"
    and event[errorMessage] == "Access to KMS is not allowed"
| summarize event_count = count(), by: {
    sourceIPAddress = event[sourceIPAddress],
    awsRegion = event[awsRegion],
    userARN = event[userIdentity][arn]
  }

If your query returns any results, each row shows the event_count for one combination of sourceIPAddress, awsRegion, and userARN.
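To make clear what one aggregated row represents, the following Python sketch mirrors the filter and the summarize step on hypothetical parsed events. The `request` and `kms_denied_summary` helpers, the IP addresses, the ARN, and the placeholder denial message are invented for illustration; only the Access to KMS is not allowed message comes from the query above.

```python
from collections import Counter

# Hypothetical identity; real CloudTrail records carry many more fields.
ALICE = "arn:aws:iam::111122223333:user/alice"


def request(ip, error_code=None, error_message=None):
    """Build a minimal, hypothetical GetSecretValue event."""
    event = {"eventName": "GetSecretValue", "sourceIPAddress": ip,
             "awsRegion": "us-east-1", "userIdentity": {"arn": ALICE}}
    if error_code is not None:
        event["errorCode"] = error_code
        event["errorMessage"] = error_message
    return event


events = [
    request("203.0.113.10", "AccessDenied", "Access to KMS is not allowed"),
    request("203.0.113.10", "AccessDenied", "Access to KMS is not allowed"),
    request("203.0.113.10", "AccessDenied", "placeholder for a different denial reason"),
    request("198.51.100.7"),  # a successful request, no error fields
]


def kms_denied_summary(events):
    """Count KMS-related AccessDenied GetSecretValue events per (IP, region, user ARN)."""
    counts = Counter()
    for e in events:
        if (e.get("eventName") == "GetSecretValue"
                and e.get("errorCode") == "AccessDenied"
                and e.get("errorMessage") == "Access to KMS is not allowed"):
            key = (e.get("sourceIPAddress"), e.get("awsRegion"),
                   (e.get("userIdentity") or {}).get("arn"))
            counts[key] += 1
    return [
        {"sourceIPAddress": ip, "awsRegion": region, "userARN": arn, "event_count": n}
        for (ip, region, arn), n in counts.items()
    ]


for row in kms_denied_summary(events):
    print(row)
```

Only the two KMS-related denials are counted, so the sketch yields a single row with event_count 2.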

Unauthorized Secret requests

In this case, the account is trying to read secrets it isn't authorized to access. Either the secret's configuration is incorrect, or the account is being used to access secrets it wasn't meant for. The latter is a potential security threat if the intent is malicious.

To check your CloudTrail logs for such events:

- Create a filter to fetch only GetSecretValue events with an AccessDenied error code.
- If the requested secret doesn't exist or the user doesn't have access to it, the secret's ARN is included in the error message. Parse the secretId out of the error message.
- Keep only the events where secretId is present.
- Aggregate the results by sourceIPAddress, awsRegion, userARN, and secretId of the unauthorized attempts. Your DQL query should look like this:

fetch logs, from: -30min
| filter dt.system.bucket == "my_aws_bucket"
| filter aws.service == "cloudtrail"
| parse content, "json:event"
| filter event[eventName] == "GetSecretValue"
    and event[errorCode] == "AccessDenied"
| parse event[errorMessage], "LD ':secret:' STRING:secretId"
| filter isNotNull(secretId)
| summarize count(), by: {
    sourceIPAddress = event[sourceIPAddress],
    awsRegion = event[awsRegion],
    userARN = event[userIdentity][arn],
    secretId
  }

If your query returns any results, each row shows how many times a user from a given IP address and AWS region was denied access to a specific secretId.
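The parse step is the key part of this query. The following Python sketch approximates it with a regular expression that captures the text after the first :secret: up to the next whitespace, then aggregates like the summarize step. The regex is an assumption and does not reproduce the exact semantics of the DPL STRING matcher; the error message wording, the `denied` and `unauthorized_secret_requests` helpers, and all sample values are hypothetical.

```python
import re
from collections import Counter

# Approximation of `parse event[errorMessage], "LD ':secret:' STRING:secretId"`:
# capture everything after the first ":secret:" up to the next whitespace.
SECRET_ID = re.compile(r":secret:(\S+)")

ALICE = "arn:aws:sts::111122223333:assumed-role/app/alice"


def denied(secret_name, ip="203.0.113.10"):
    """Build a hypothetical AccessDenied event; real CloudTrail wording may differ."""
    message = (f"User: {ALICE} is not authorized to perform: secretsmanager:GetSecretValue "
               f"on resource: arn:aws:secretsmanager:us-east-1:111122223333:secret:{secret_name}")
    return {"eventName": "GetSecretValue", "errorCode": "AccessDenied", "errorMessage": message,
            "sourceIPAddress": ip, "awsRegion": "us-east-1", "userIdentity": {"arn": ALICE}}


events = [
    denied("prod/db-password"),
    denied("prod/db-password"),
    denied("prod/api-key"),
    # No ":secret:" in the message, so no secretId is extracted and the event is dropped.
    {"eventName": "GetSecretValue", "errorCode": "AccessDenied",
     "errorMessage": "Access to KMS is not allowed", "sourceIPAddress": "203.0.113.10",
     "awsRegion": "us-east-1", "userIdentity": {"arn": ALICE}},
]


def unauthorized_secret_requests(events):
    """Count AccessDenied GetSecretValue events per (IP, region, user ARN, secretId)."""
    counts = Counter()
    for e in events:
        if e.get("eventName") != "GetSecretValue" or e.get("errorCode") != "AccessDenied":
            continue
        match = SECRET_ID.search(e.get("errorMessage") or "")
        if match is None:
            continue  # counterpart of `filter isNotNull(secretId)`
        key = (e.get("sourceIPAddress"), e.get("awsRegion"),
               (e.get("userIdentity") or {}).get("arn"), match.group(1))
        counts[key] += 1
    return counts


for (ip, region, arn, secret_id), n in unauthorized_secret_requests(events).items():
    print(ip, region, secret_id, n)
```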

Detect requesting non-existing secrets

Requesting secrets that don't exist might indicate a security issue, for example, someone trying to enumerate your secrets by requesting all kinds of secret names, many of which don't exist. It might also indicate an operational issue: secrets that a service relies on are no longer available, which disrupts the service.

To check your CloudTrail logs for such events:

- Create a filter to fetch GetSecretValue events.
- Append a filter condition to keep only events with the ResourceNotFoundException error code.
- Aggregate the results by sourceIPAddress, awsRegion, and userARN, and collect the number of events and the number of distinct secrets requested by each user in the respective AWS region from the specific IP address.

If you see a large number of distinct secrets requested by a single userARN, it might be secret enumeration. If the number of distinct secrets is low, something has probably happened to the secret (a wrong set of privileges, the secret has been removed, or similar). Your DQL query should look like this:

fetch logs, from: -30min
| filter dt.system.bucket == "my_aws_bucket"
| filter aws.service == "cloudtrail"
| parse content, "json:event"
| filter event[eventName] == "GetSecretValue"
    and event[errorCode] == "ResourceNotFoundException"
| summarize {
    event_count = count(),
    distinct_secrets = countDistinct(event[requestParameters][secretId])
  }, by: {
    sourceIPAddress = event[sourceIPAddress],
    awsRegion = event[awsRegion],
    userARN = event[userIdentity][arn]
  }
| sort distinct_secrets desc
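The distinction between enumeration and an operational issue rests on the ratio of events to distinct secrets. The following Python sketch reproduces the aggregation and sorting on hypothetical events and then labels each row. The ENUMERATION_THRESHOLD value, the `not_found` and `not_found_summary` helpers, and all identities and addresses are hypothetical; a suitable threshold depends on your environment.

```python
from collections import defaultdict

ENUMERATION_THRESHOLD = 10  # hypothetical cut-off; tune it for your environment

SCANNER = "arn:aws:iam::111122223333:user/scanner"
SERVICE = "arn:aws:iam::111122223333:role/checkout-service"


def not_found(secret_id, arn, ip):
    """Build a minimal, hypothetical ResourceNotFoundException event."""
    return {"eventName": "GetSecretValue", "errorCode": "ResourceNotFoundException",
            "requestParameters": {"secretId": secret_id},
            "sourceIPAddress": ip, "awsRegion": "us-east-1", "userIdentity": {"arn": arn}}


events = (
    # One identity guessing many different secret names.
    [not_found(f"prod/guess-{i}", SCANNER, "198.51.100.23") for i in range(12)]
    # A service repeatedly asking for one secret that no longer exists.
    + [not_found("prod/payment-token", SERVICE, "10.0.0.5") for _ in range(3)]
)


def not_found_summary(events):
    """Count events and distinct secretIds per (IP, region, user ARN), most distinct first."""
    groups = defaultdict(lambda: {"event_count": 0, "secrets": set()})
    for e in events:
        if e.get("eventName") != "GetSecretValue" or e.get("errorCode") != "ResourceNotFoundException":
            continue
        key = (e.get("sourceIPAddress"), e.get("awsRegion"), (e.get("userIdentity") or {}).get("arn"))
        group = groups[key]
        group["event_count"] += 1
        secret_id = (e.get("requestParameters") or {}).get("secretId")
        if secret_id is not None:  # this sketch ignores events without a secretId
            group["secrets"].add(secret_id)
    rows = [
        {"sourceIPAddress": ip, "awsRegion": region, "userARN": arn,
         "event_count": g["event_count"], "distinct_secrets": len(g["secrets"])}
        for (ip, region, arn), g in groups.items()
    ]
    # Counterpart of `sort distinct_secrets desc`.
    rows.sort(key=lambda row: row["distinct_secrets"], reverse=True)
    return rows


for row in not_found_summary(events):
    if row["distinct_secrets"] >= ENUMERATION_THRESHOLD:
        verdict = "possible enumeration"
    else:
        verdict = "likely operational issue"
    print(row["userARN"], row["event_count"], row["distinct_secrets"], verdict)
```

Here the scanner identity lands on top with 12 distinct secrets in 12 events, while the service shows 3 events for a single missing secret, which fits the operational-issue pattern described above.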