AI in Workflows - Predictive Maintenance of Cloud Disks

- Latest Dynatrace

- Tutorial

- 6-min read

Davis® analyzers offer a broad range of general-purpose artificial intelligence and machine learning (AI/ML) functionality, such as learning and predicting time series, detecting anomalies, or identifying metric behavior changes within time series. Davis for Workflows enables you to integrate those analyzers into your custom workflows. An example use case is a fully automated task that predicts future capacity demands and triggers remediation. Because you are notified days in advance, you can avoid critical outages before incidents arise.

Install Davis for Workflows

To use Davis® for Workflows actions, you first need to install Davis® for Workflows from Dynatrace Hub.

- In Dynatrace Hub, search for Davis® for Workflows.

- Select Davis® for Workflows and select Install.

After installation, Davis actions appear automatically in the Choose action section of Workflows.

Example use case

This use case shows how you can leverage the Davis forecast analyzer to predict future disk capacity needs and raise predictive alerts weeks before critical incidents occur.

Grant necessary permissions

Explore capacity measurements

Define a trigger schedule

Configure the forecast

Evaluate the result

Remediate before it happens

Review raised problems

Grant necessary permissions


A successful Davis analysis requires proper access rights.

- In Workflows, go to Settings > Authorization settings.

- Grant the following primary permission.

app-engine:functions:run

- Grant the following secondary permissions.

davis:analyzers:read

davis:analyzers:execute

storage:bizevents:read

storage:buckets:read

storage:events:read

storage:logs:read

storage:metrics:read

storage:spans:read

storage:system:read

- In the top right, select Save.

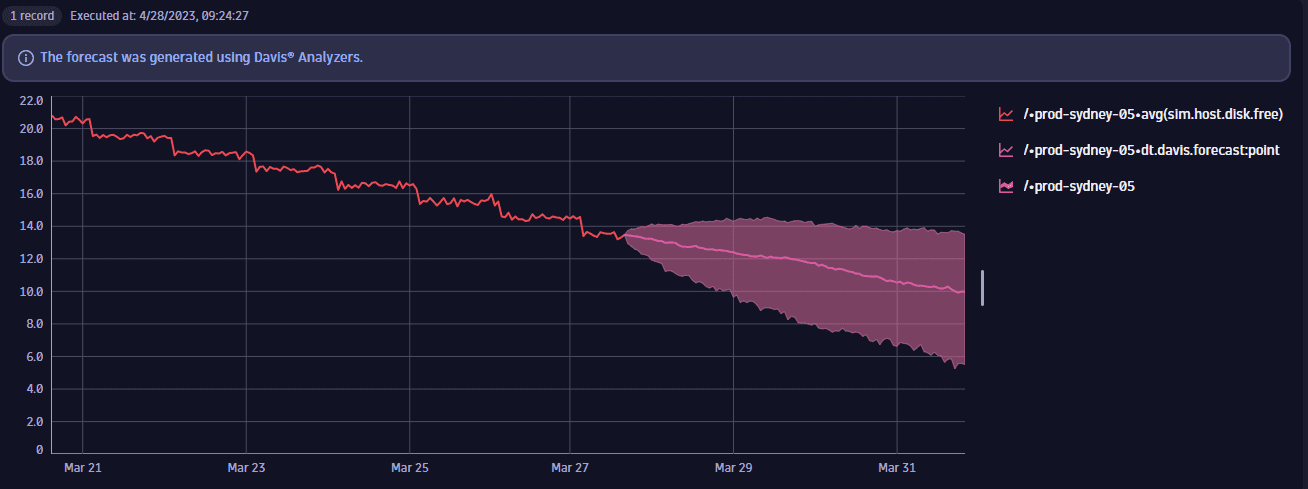
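Before running the workflow, it can help to compare the permissions listed above against what has actually been granted. The following is a minimal sketch in plain TypeScript; the `missingScopes` helper and the way the granted list is obtained are hypothetical and not part of any Dynatrace API.

```typescript
// Scopes listed in this tutorial: one primary and nine secondary permissions.
const REQUIRED_SCOPES: string[] = [
  'app-engine:functions:run',
  'davis:analyzers:read',
  'davis:analyzers:execute',
  'storage:bizevents:read',
  'storage:buckets:read',
  'storage:events:read',
  'storage:logs:read',
  'storage:metrics:read',
  'storage:spans:read',
  'storage:system:read',
];

// Hypothetical helper: returns the required scopes that are absent from `granted`.
function missingScopes(granted: string[]): string[] {
  const grantedSet = new Set(granted);
  return REQUIRED_SCOPES.filter((scope) => !grantedSet.has(scope));
}

// Example: a workflow that was only granted the primary permission.
const missing = missingScopes(['app-engine:functions:run']);
console.log(missing.length + ' scopes still missing');
```

An empty result means every permission named in this section is present in the list you passed in.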

Explore capacity measurements in a notebook


Predictive capacity management starts within Notebooks where you need to configure your capacity indicators. The image below shows an example of the free disk percentage indicator for an operations team.

Once you have the required indicators, it's time to build the workflow that triggers a forecast at regular intervals.

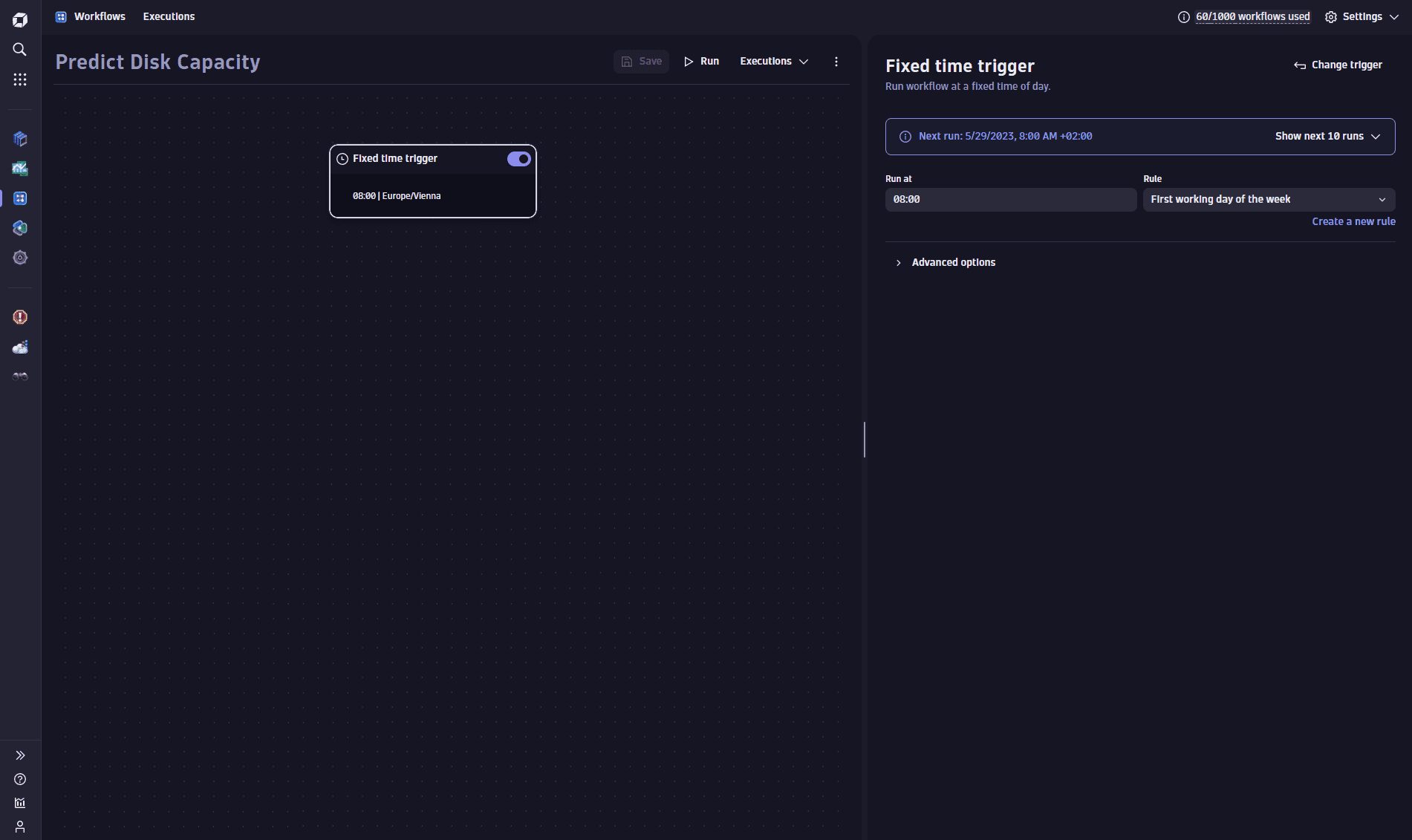

Define a trigger schedule


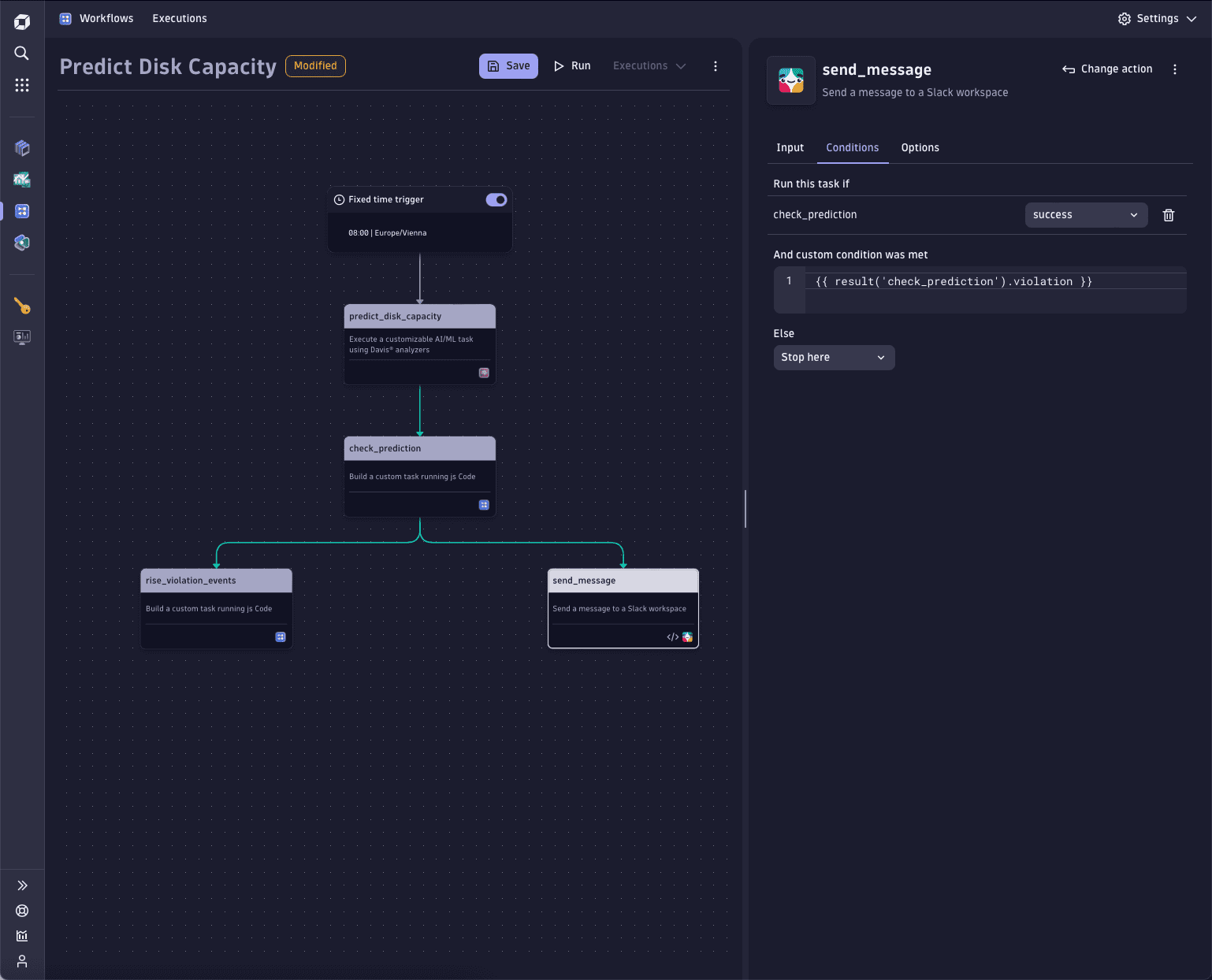

In Workflows, configure the required schedule to trigger the forecast. To learn how, see Workflow schedule trigger. The image below shows the workflow that runs at 8:00 AM to trigger the forecast of all the disks that are likely to run out of space in the next week.

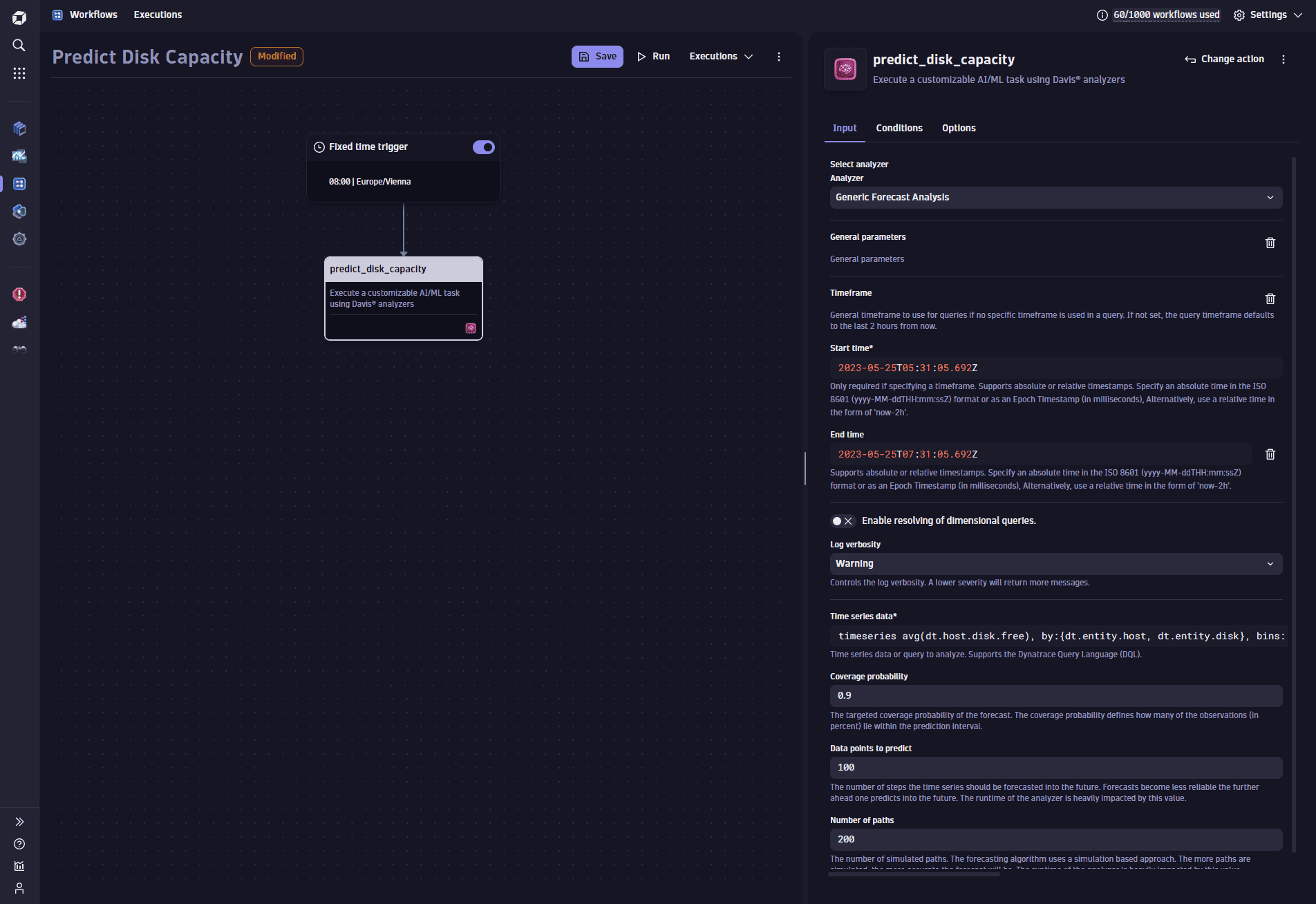

Configure the forecast


To trigger the forecast from a workflow, you need the Analyze with Davis action. The action takes a forecast analyzer and the data set to forecast. You can use any time series data for the forecast; you only need to fetch it from Grail with a DQL query. Here, we define a set of disks for which we want to predict capacity. We use the dt.host.disk.free metric, but you can use any capacity metric, such as host CPU, memory, or network load. You can even extract the value from a log line.

Our forecast is trained on a relative timeframe of the last seven days, specified in the DQL query. It predicts 100 data points; that is, the 120 points fetched from Grail are extended by 100 predicted data points, spanning approximately one week into the future. See the DQL query below.

The action returns all the forecasted time series, which could be hundreds or thousands of individual disk predictions.
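The relationship between bins, training window, and forecast horizon can be worked out with simple arithmetic. The sketch below uses the values from this tutorial and assumes that predicted points keep the same spacing as the input bins; that spacing is an assumption, not something this page states.

```typescript
// Values taken from the tutorial's query and action settings.
const TRAINING_DAYS = 7;
const BINS = 120;
const POINTS_TO_PREDICT = 100;

// Width of one bin in minutes: 7 days spread evenly over 120 bins.
const binMinutes = (TRAINING_DAYS * 24 * 60) / BINS;

// Forecast horizon in days, assuming predicted points use the same bin width.
const horizonDays = (POINTS_TO_PREDICT * binMinutes) / (24 * 60);

console.log(`bin width: ${binMinutes} min, horizon: ${horizonDays.toFixed(2)} days`);
```

Under that assumption, each bin covers 84 minutes and the 100 predicted points reach a little under six days ahead, which matches the "approximately one week" described above.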

To configure this forecast in the action

- Add a new Analyze with Davis action.

- Set the name of the action as predict_disk_capacity.

- Select Generic Forecast Analysis as the analyzer.

- In Time series data, specify the following DQL query:

timeseries avg(dt.host.disk.free), by:{dt.entity.host, dt.entity.disk}, bins: 120, from:now()-7d, to:now()

- Set Data points to predict to 100.
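If you want to reuse the same forecast setup for other capacity metrics, the query string can be assembled from a few parameters. This is a minimal sketch; `ForecastQueryParams` and `buildForecastQuery` are hypothetical names, and the function only concatenates text in the same form as the query shown above.

```typescript
// Hypothetical parameter object; the field names are illustrative only.
interface ForecastQueryParams {
  metric: string;
  dimensions: string[];
  bins: number;
  trainingDays: number;
}

// Builds a DQL timeseries query string in the same form as the tutorial's query.
function buildForecastQuery(p: ForecastQueryParams): string {
  return (
    `timeseries avg(${p.metric}), ` +
    `by:{${p.dimensions.join(', ')}}, ` +
    `bins: ${p.bins}, ` +
    `from:now()-${p.trainingDays}d, to:now()`
  );
}

// Reproduces the disk query used in this tutorial.
const diskQuery = buildForecastQuery({
  metric: 'dt.host.disk.free',
  dimensions: ['dt.entity.host', 'dt.entity.disk'],
  bins: 120,
  trainingDays: 7,
});
console.log(diskQuery);
```

The helper performs no validation, so metric and dimension names should come from trusted configuration rather than user input.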

Evaluate the result


The next workflow action tests each prediction to determine whether the disk will run out of space during the next week. It's a Run JavaScript action that runs custom TypeScript code, checks each forecast against a threshold, and passes all violations to the next action. It returns a custom object with a boolean flag (violation) and an array containing violation details (violations).

- Add a new Run JavaScript action.

- Set the name of the action as check_prediction.

- Use the following source code.

    import { execution } from '@dynatrace-sdk/automation-utils';

    const THRESHOLD = 15;
    const TASK_ID = 'predict_disk_capacity';

    export default async function ({ executionId }) {
      const exe = await execution(executionId);
      const predResult = await exe.result(TASK_ID);
      const result = predResult['result'];
      const predictionSummary = { violation: false, violations: new Array<Record<string, string>>() };
      // Check if prediction was successful.
      if (result && result.executionStatus == 'COMPLETED') {
        console.log('Prediction was successful.');
        // Log the count only after confirming that a result exists.
        console.log('Total number of predicted lines: ' + result.output.length);
        // Check each predicted result, if it violates the threshold.
        for (let i = 0; i < result.output.length; i++) {
          const prediction = result.output[i];
          // Check if the prediction result is considered valid
          if (prediction.analysisStatus == 'OK' && prediction.forecastQualityAssessment == 'VALID') {
            const lowerPredictions = prediction.timeSeriesDataWithPredictions.records[0]['dt.davis.forecast:lower'];
            const lastValue = lowerPredictions[lowerPredictions.length - 1];
            // check against the threshold
            if (lastValue < THRESHOLD) {
              predictionSummary.violation = true;
              // we need to remember all metric properties in the result,
              // to inform the next actions which disk ran out of space
              predictionSummary.violations.push(prediction.timeSeriesDataWithPredictions.records[0]);
            }
          }
        }
        console.log(predictionSummary.violations.length == 0 ? 'No violations found :)' : '' + predictionSummary.violations.length + ' capacity shortages were found!');
        return predictionSummary;
      } else {
        console.log('Prediction run failed!');
      }
    }
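To try the threshold logic outside a workflow, the check can be isolated into a pure function and run against mock data. The sketch below is an illustration under assumptions: the `Prediction` and `ForecastRecord` types only model the fields the action reads, the entity IDs are placeholders, and `'FAILED'` stands in for any status other than `'OK'`.

```typescript
// Assumed shape of one forecast record, reduced to the fields the check reads.
interface ForecastRecord {
  'dt.davis.forecast:lower': number[];
  [key: string]: unknown;
}

// Assumed shape of one entry in the analyzer output.
interface Prediction {
  analysisStatus: string;
  forecastQualityAssessment: string;
  timeSeriesDataWithPredictions: { records: ForecastRecord[] };
}

// Returns the records whose last lower-bound forecast value is below the threshold.
function findViolations(output: Prediction[], threshold: number): ForecastRecord[] {
  const violations: ForecastRecord[] = [];
  for (const prediction of output) {
    // Skip forecasts that did not complete or are not considered valid.
    if (prediction.analysisStatus !== 'OK' || prediction.forecastQualityAssessment !== 'VALID') {
      continue;
    }
    const record = prediction.timeSeriesDataWithPredictions.records[0];
    if (!record) continue;
    const lower = record['dt.davis.forecast:lower'];
    const lastValue = lower[lower.length - 1];
    if (lastValue !== undefined && lastValue < threshold) {
      violations.push(record);
    }
  }
  return violations;
}

// Mock data: DISK-A drops below 15, DISK-B stays above, DISK-C has no usable forecast.
const mockOutput: Prediction[] = [
  {
    analysisStatus: 'OK',
    forecastQualityAssessment: 'VALID',
    timeSeriesDataWithPredictions: {
      records: [{ 'dt.entity.disk': 'DISK-A', 'dt.davis.forecast:lower': [22.0, 16.5, 9.5] }],
    },
  },
  {
    analysisStatus: 'OK',
    forecastQualityAssessment: 'VALID',
    timeSeriesDataWithPredictions: {
      records: [{ 'dt.entity.disk': 'DISK-B', 'dt.davis.forecast:lower': [48.0, 45.0, 42.0] }],
    },
  },
  {
    analysisStatus: 'FAILED', // placeholder for any status other than 'OK'
    forecastQualityAssessment: 'VALID',
    timeSeriesDataWithPredictions: {
      records: [{ 'dt.entity.disk': 'DISK-C', 'dt.davis.forecast:lower': [10.0, 5.0, 3.0] }],
    },
  },
];

const violations = findViolations(mockOutput, 15);
console.log(violations.length + ' capacity shortage(s) found');
```

Like the action's code, this only inspects the last predicted value of the lower bound. A disk that dips below the threshold mid-window and recovers would not be reported; checking the minimum of the series instead is one possible variation.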

Remediate before it happens


You have a variety of remediation actions to follow up on predicted capacity shortages. In our example, the workflow raises a Davis problem and sends a Slack message for each potential shortage. Both are conditional actions that only trigger if the forecast predicts any disk space shortages.

Each raised Davis problem carries custom properties that provide insight into the situation and help to identify the problematic disk.

To send a message

- Add a new Send message action.

- Set the name of the action as send_message.

- Configure the message. To learn how, see Slack Connector.

- Open the Conditions tab.

- Select the success condition for the check_prediction action.

- Add the following custom condition:

{{ result('check_prediction').violation }}
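The message text itself can summarize which disks are affected. The following sketch shows one way to turn the violations array into readable text; `formatShortageMessage` is a hypothetical helper, the entity IDs are placeholders, and the percent sign assumes the metric is a free-space percentage. How the resulting string is passed to the Send message action is left open here.

```typescript
// Assumed subset of the fields carried by each violation record.
interface Violation {
  'dt.entity.host': string;
  'dt.entity.disk': string;
  'dt.davis.forecast:lower': number[];
}

// Hypothetical helper: builds a plain-text summary with one line per affected disk.
function formatShortageMessage(violations: Violation[], threshold: number): string {
  const lines = violations.map((v) => {
    const lower = v['dt.davis.forecast:lower'];
    // Use the last predicted lower-bound value, as the check action does.
    const last = lower[lower.length - 1] ?? NaN;
    return (
      `- disk ${v['dt.entity.disk']} on host ${v['dt.entity.host']}: ` +
      `predicted lower bound ${last.toFixed(1)}% (threshold ${threshold}%)`
    );
  });
  return [`${violations.length} predicted disk capacity shortage(s):`, ...lines].join('\n');
}

const message = formatShortageMessage(
  [{ 'dt.entity.host': 'HOST-A', 'dt.entity.disk': 'DISK-A', 'dt.davis.forecast:lower': [22.0, 16.5, 9.5] }],
  15,
);
console.log(message);
```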

To raise a Davis problem

- Add a new Run JavaScript action.

- Set the name of the action as raise_violation_events.

- Use the following source code.

    import { eventsClient, EventIngestEventType } from '@dynatrace-sdk/client-classic-environment-v2';
    import { execution } from '@dynatrace-sdk/automation-utils';

    export default async function ({ executionId }) {
      const exe = await execution(executionId);
      const checkResult = await exe.result('check_prediction');
      const violations = checkResult.violations;
      // Raise an event for each violation and wait until all requests have completed,
      // so the action does not finish before the events are sent.
      await Promise.all(violations.map((violation) =>
        eventsClient.createEvent({
          body: {
            eventType: EventIngestEventType.ResourceContentionEvent,
            title: 'Predicted Disk Capacity Alarm',
            entitySelector: 'type(DISK),entityId("' + violation['dt.entity.disk'] + '")',
            properties: {
              'dt.entity.host': violation['dt.entity.host']
            }
          }
        })
      ));
    }

- Open the Conditions tab.

- Select the success condition for the check_prediction action.

- Add the following custom condition.

{{ result('check_prediction').violation }}
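The event body built for each violation can be examined in isolation before sending anything. The sketch below builds the same structure as a plain object; `buildEventBody` and its types are hypothetical, the entity IDs are placeholders, and the string `'RESOURCE_CONTENTION_EVENT'` is assumed to be the value behind `EventIngestEventType.ResourceContentionEvent`.

```typescript
// Assumed subset of the fields carried by each violation record.
interface ViolationRecord {
  'dt.entity.host': string;
  'dt.entity.disk': string;
}

// Plain-object model of the event body used in the action above.
interface EventBody {
  eventType: string;
  title: string;
  entitySelector: string;
  properties: Record<string, string>;
}

// Hypothetical helper: maps one violation to an event body without sending it.
function buildEventBody(violation: ViolationRecord): EventBody {
  return {
    eventType: 'RESOURCE_CONTENTION_EVENT', // assumed enum value, see lead-in
    title: 'Predicted Disk Capacity Alarm',
    // Target the affected disk entity by its ID.
    entitySelector: `type(DISK),entityId("${violation['dt.entity.disk']}")`,
    // Custom properties help identify the host that owns the disk.
    properties: { 'dt.entity.host': violation['dt.entity.host'] },
  };
}

const body = buildEventBody({ 'dt.entity.host': 'HOST-A', 'dt.entity.disk': 'DISK-A' });
console.log(JSON.stringify(body, null, 2));
```

Keeping the mapping separate from the API call makes it easy to add further custom properties, such as the predicted value, and to check the payload before any event is raised.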

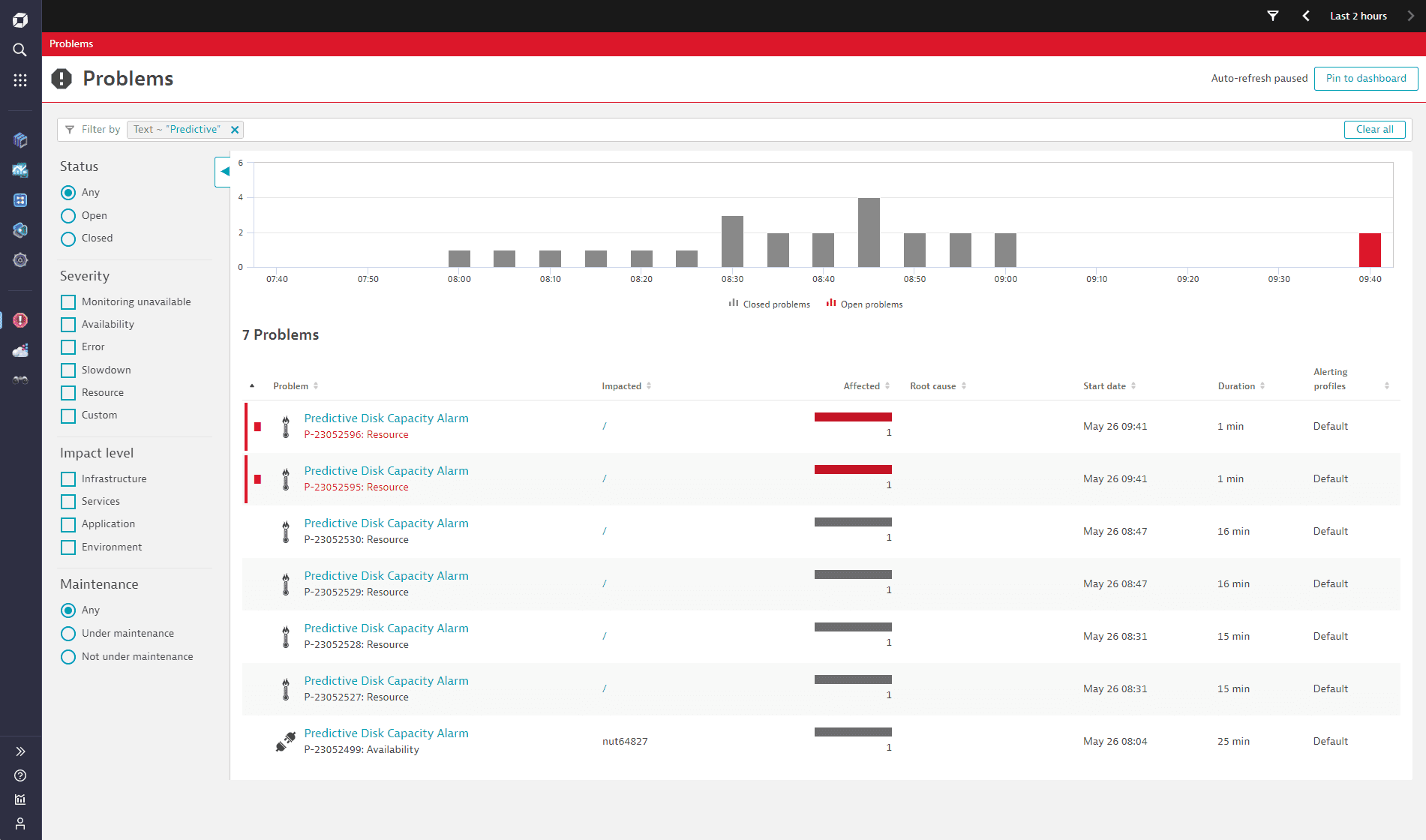

Review all Davis predicted capacity problems


In Dynatrace, the operations team can review all predicted capacity shortages in the Davis problems feed.

Raising a problem is an optional remediation step that you can skip completely, opting instead to notify the responsible teams. In this example, it illustrates the flexibility and power of the AutomationEngine combined with the analytical capabilities of Davis AI and Grail.