Examples of anomaly detection on Grail

- Published May 14, 2024

These examples show how to use DQL to transform data from Grail into time series that can serve as input for anomaly detection analyzers.

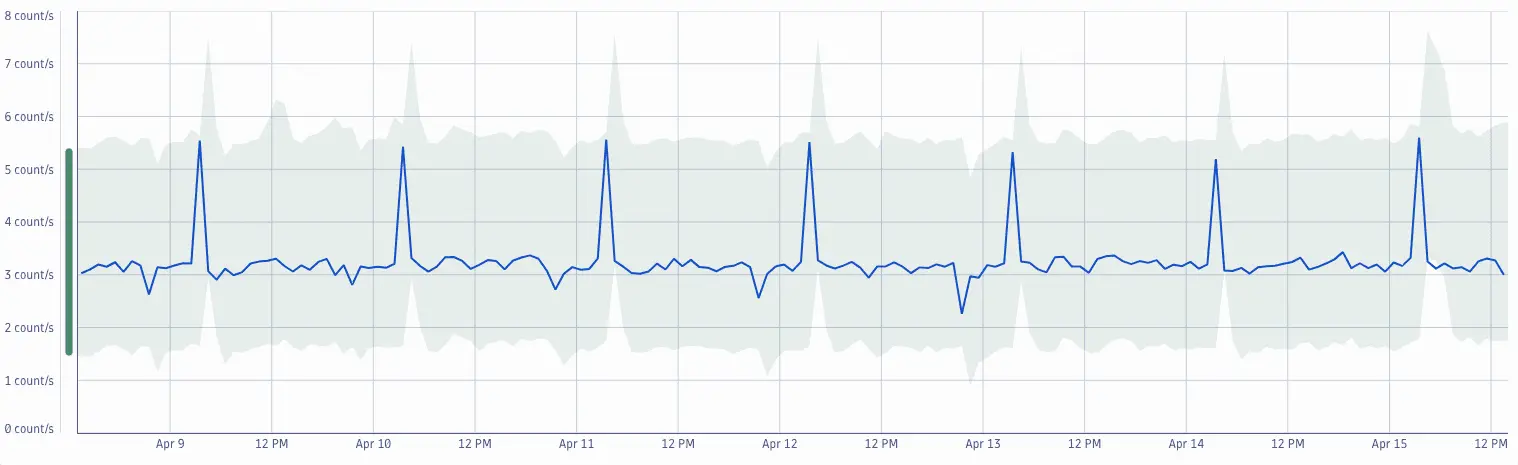

Detect anomalies in seasonal time series

Suppose you want to use anomaly detection to receive alerts on abnormal network load.

An auto-adaptive or static threshold will do the job when your normal load is homogeneous, but what if the normal behavior is seasonal?

- A static threshold would generate false positives on spikes.

- An auto-adaptive threshold might miss an anomaly at the low end of the wave because it has adapted to the spikes.

This is where a seasonal baseline comes in handy, as it adapts the baseline according to the seasonality of your data.
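To make the difference concrete, here is a minimal Python sketch of a per-phase baseline. It is not the Davis algorithm; it is a simplified illustration that assumes the period is known in advance, and the function names, the tolerance of three standard deviations, and the sample data are all hypothetical.

```python
from statistics import mean, pstdev

def seasonal_baseline(training, period, tolerance=3.0):
    """Return one (lower, upper) bound per position in the seasonal cycle."""
    bounds = []
    for phase in range(period):
        samples = training[phase::period]  # values sharing the same cycle position
        center = mean(samples)
        spread = pstdev(samples)
        bounds.append((center - tolerance * spread, center + tolerance * spread))
    return bounds

def seasonal_anomalies(values, bounds):
    """Return indexes of values outside the bounds for their cycle position."""
    period = len(bounds)
    return [
        i for i, v in enumerate(values)
        if not bounds[i % period][0] <= v <= bounds[i % period][1]
    ]

# Hypothetical training data: six cycles of a 4-step pattern with a regular spike.
pattern = [10, 12, 50, 11]
training = [v + (cycle % 3 - 1) for cycle in range(6) for v in pattern]

# New data with one abnormal value (30) in the low part of the wave.
new_data = [10, 12, 50, 11, 10, 30, 50, 11]

bounds = seasonal_baseline(training, period=4)
seasonal_hits = seasonal_anomalies(new_data, bounds)
static_hits = [i for i, v in enumerate(new_data) if v > 40]

print(seasonal_hits)  # [5]    -> only the abnormal value
print(static_hits)    # [2, 6] -> the regular spikes, while index 5 is missed
```

In this sketch, the static threshold of 40 flags both regular spikes and misses the abnormal value of 30, while the per-phase bounds flag only the value that is unusual for its position in the cycle.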

In this example, Davis built a baseline for the average network load with a seasonal pattern. We used this DQL query to obtain the data:

timeseries avg(dt.process.network.load)

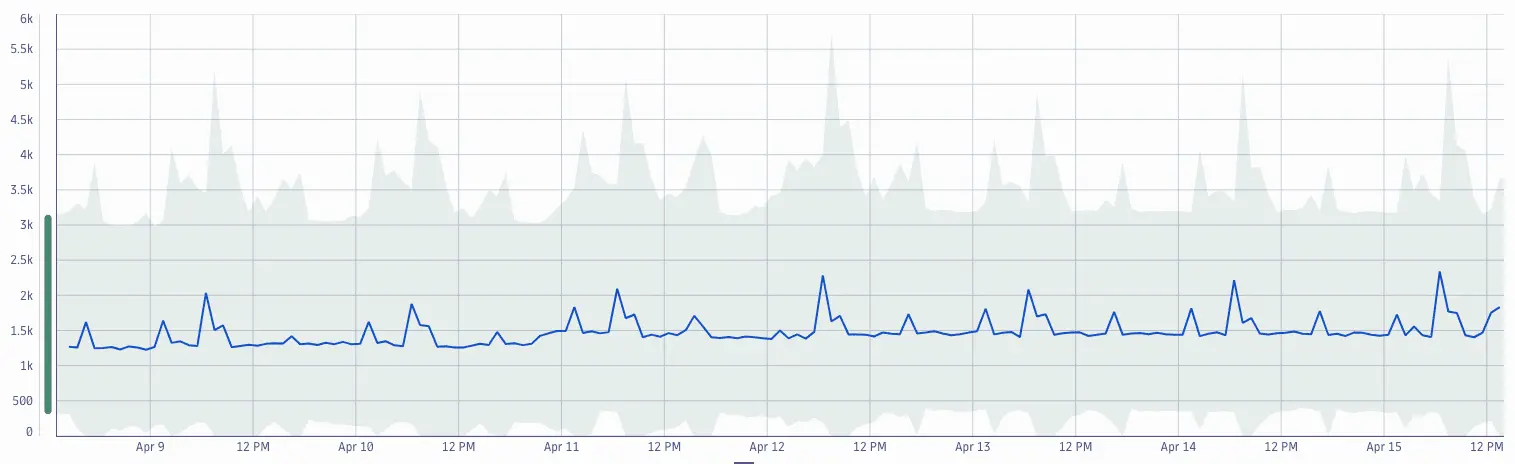

Identify anomalies in number of events or problems

Sometimes it's important to understand whether the number of detected events or problems is aligned with your expectations. DQL allows you to transform any set of measurements into a time series that you can use as input for anomaly detection.

In this example, DQL creates a time series from the count of events and feeds it into the anomaly detection analyzer.

fetch events
| filter event.kind == "DAVIS_EVENT"
| makeTimeseries count(), time:{timestamp}
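Conceptually, turning events into a count time series means assigning each event to a fixed-width time bucket and counting per bucket. The Python sketch below illustrates that idea only; it does not reproduce how `makeTimeseries` is implemented. The function name, the 15-minute interval, the sample timestamps, and the choice to report empty buckets as 0 are assumptions of this sketch.

```python
import math
from datetime import datetime, timedelta

def count_per_interval(timestamps, start, end, interval):
    """Count timestamps per fixed-width bucket in the half-open range [start, end)."""
    n_buckets = math.ceil((end - start) / interval)
    counts = [0] * n_buckets  # sketch assumption: empty buckets are reported as 0
    for ts in timestamps:
        if start <= ts < end:
            counts[(ts - start) // interval] += 1
    return counts

# Hypothetical event timestamps within one hour.
start = datetime(2024, 5, 14, 0, 0)
end = start + timedelta(hours=1)
events = [
    start + timedelta(minutes=1),
    start + timedelta(minutes=5),
    start + timedelta(minutes=20),
    start + timedelta(minutes=50),
    start + timedelta(minutes=59),
]

counts = count_per_interval(events, start, end, timedelta(minutes=15))
print(counts)  # [2, 1, 0, 2]
```

The resulting list is an evenly spaced series of counts, which is the shape of input an anomaly detection analyzer works on.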

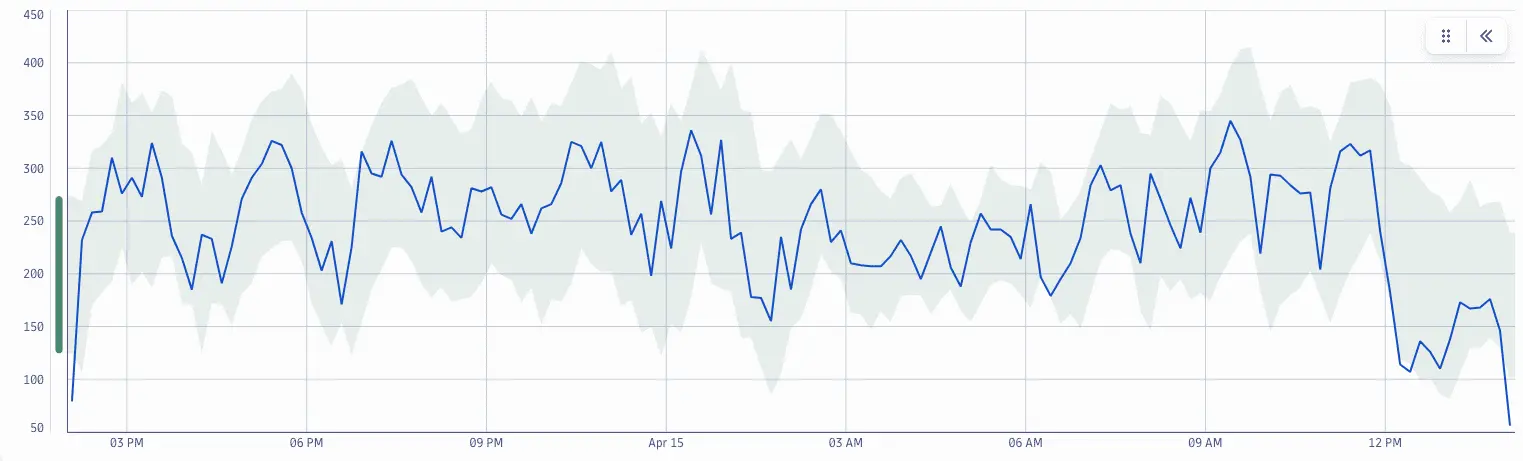

Detect anomalies within a log pattern

Logs that your systems produce contain valuable information, for example, critical crash information or the count of failed login attempts. With logs stored in Grail, DQL allows you to extract records into time series by pattern.

In this example, DQL creates a time series from the count of occurrences of the "No journey found" log pattern and feeds it into the anomaly detection analyzer.

fetch logs
| filter contains(content, "No journey found")
| makeTimeseries count(), time:{timestamp}
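The same two steps, filtering records by a substring and then counting the matches per time bucket, can be sketched in Python as follows. This is an illustration of the idea rather than of DQL itself: the record layout, the per-minute bucket size, and the sample log lines are hypothetical.

```python
from collections import Counter
from datetime import datetime

def pattern_counts_per_minute(records, pattern):
    """Count records whose content contains the pattern, keyed by minute."""
    counts = Counter()
    for record in records:
        # Python's "in" performs a case-sensitive substring match.
        if pattern in record["content"]:
            minute = record["timestamp"].replace(second=0, microsecond=0)
            counts[minute] += 1
    return dict(sorted(counts.items()))

# Hypothetical log records.
records = [
    {"timestamp": datetime(2024, 5, 14, 10, 0, 5), "content": "No journey found for request 17"},
    {"timestamp": datetime(2024, 5, 14, 10, 0, 40), "content": "Journey booked"},
    {"timestamp": datetime(2024, 5, 14, 10, 0, 59), "content": "WARN No journey found"},
    {"timestamp": datetime(2024, 5, 14, 10, 2, 1), "content": "No journey found"},
]

per_minute = pattern_counts_per_minute(records, "No journey found")
for minute, count in per_minute.items():
    print(minute.isoformat(), count)
```

Minutes without a match are simply absent from this sketch's result, so a caller that needs an evenly spaced series would have to fill those gaps before analysis.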

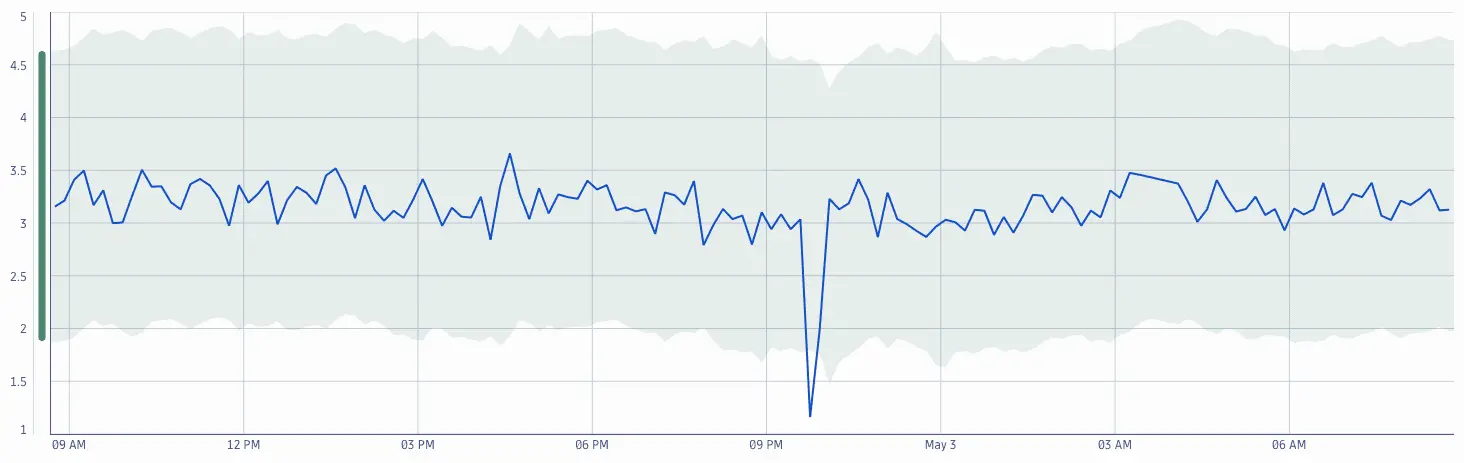

Remove extreme outliers from training data

Davis automatically removes outliers from training data to ensure a stable run of the algorithm. Large segments of outliers, however, cannot be removed automatically. For data sets with significant outliers, DQL allows you to sanitize the data before feeding it into the anomaly detection analyzer. In this example, DQL keeps only load values below 4 and discards the rest before the series reaches the analyzer.

timeseries load = avg(dt.process.network.load)
| fieldsAdd load_cleaned = iCollectArray(if(load[] < 4, load[]))
| fieldsRemove load
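As a rough Python equivalent of the element-wise cleanup in the query above, the sketch below keeps values under the limit and replaces everything else with `None`, so the series keeps its length and time alignment. It assumes that values failing the condition become empty entries rather than being dropped; the function name and sample values are hypothetical, and the limit of 4 is taken from the query.

```python
def mask_outliers(series, upper_limit=4):
    """Keep values below upper_limit; replace all others with None."""
    return [
        value if value is not None and value < upper_limit else None
        for value in series
    ]

# Hypothetical load values containing extreme outliers.
load = [1.2, 0.8, 9.5, 1.1, 12.0, 3.9, 4.0]

load_cleaned = mask_outliers(load)
print(load_cleaned)  # [1.2, 0.8, None, 1.1, None, 3.9, None]
```

Replacing outliers with gaps instead of deleting them keeps every remaining value at its original position in time, which matters when the series is later used as training data.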

Anomaly Detection