Built-in classic metrics

- Reference

- 3-min read

- Published Sep 03, 2020

Each Dynatrace-supported technology offers multiple "built-in" metrics. Built-in metrics are included in the product out of the box, in some cases as part of built-in extensions.

Metrics that are based on OneAgent or ActiveGate extensions (prefix ext:) and calculated metrics (prefix calc:) are custom metrics, not built-in metrics; DDU consumption for these metrics can vary widely depending on how you use Dynatrace.

The ext: prefix is used by metrics from OneAgent extensions and ActiveGate extensions, and also by classic metrics for AWS integration.

Despite the naming similarities, AWS integration metrics are not based on extensions.

To view all the metrics available in your environment, use the GET metrics API call. We recommend the following query parameters:

pageSize=500—to obtain the largest possible number of metrics in one response.fields=displayName,unit,aggregationTypes,dduBillable—to obtain the same set of fields as you see in these tables.- Depending on which metrics you want to query, one of the following values for the metricSelector parameter:

metricSelector=ext:*—to obtain all metrics coming from extensions.metricSelector=calc:*—to obtain all calculated metrics.- Omit the parameter to obtain all the metrics of your environment.

The sections below describe inconsistencies or limitations identified for Dynatrace built-in metrics.

Application and billing metrics for mobile and custom apps

The Other applications metrics section contains metrics captured for mobile and custom applications. These metrics, which start with builtin:apps.other, are captured without the indication whether it's a mobile or a custom application. However, the "billing" application metrics, which start with builtin:billing.apps, are split for these application types:

-

Mobile apps:

builtin:billing.apps.mobile.sessionsWithoutReplayByApplicationbuiltin:billing.apps.mobile.sessionsWithReplayByApplicationbuiltin:billing.apps.mobile.userActionPropertiesByMobileApplication

-

Custom apps:

builtin:billing.apps.custom.sessionsWithoutReplayByApplicationbuiltin:billing.apps.custom.userActionPropertiesByDeviceApplication

Billing metrics count both billed and unbilled sessions

The following "billing" metrics for session count are actually the sum of billed and unbilled user sessions.

builtin:billing.apps.custom.sessionsWithoutReplayByApplicationbuiltin:billing.apps.mobile.sessionsWithReplayByApplicationbuiltin:billing.apps.mobile.sessionsWithoutReplayByApplicationbuiltin:billing.apps.web.sessionsWithReplayByApplicationbuiltin:billing.apps.web.sessionsWithoutReplayByApplication

If you want to get only the number of billed sessions, set the Type filter to Billed.

Different measurement units for request duration metrics

Different measurement units are used for similar request duration metrics for mobile and custom apps.

builtin:apps.other.keyUserActions.requestDuration.os is measured in microseconds while other request duration metrics (builtin:apps.other.requestTimes.osAndVersion and builtin:apps.other.requestTimes.osAndProvider) are measured in milliseconds.

Custom vs built-in metrics

Custom metrics are defined or installed by the user, while built-in metrics are by default part of the product. Certain built-in metrics are disabled by default and, if turned on, will consume DDUs. These metrics cover a wide range of supported technologies, including Apache Tomcat, NGINX, Couchbase, RabbitMQ, Cassandra, Jetty, and many others.

A custom metric is a new type of metric that offers a user-provided metric identifier and unit of measure. The semantics of custom metrics are defined by you and aren't included in the default OneAgent installation. Custom metrics are sent to Dynatrace through various interfaces. Following the definition of a custom metric, the metric can be reported for multiple monitored components. Each component’s custom metric results in a separate timeseries.

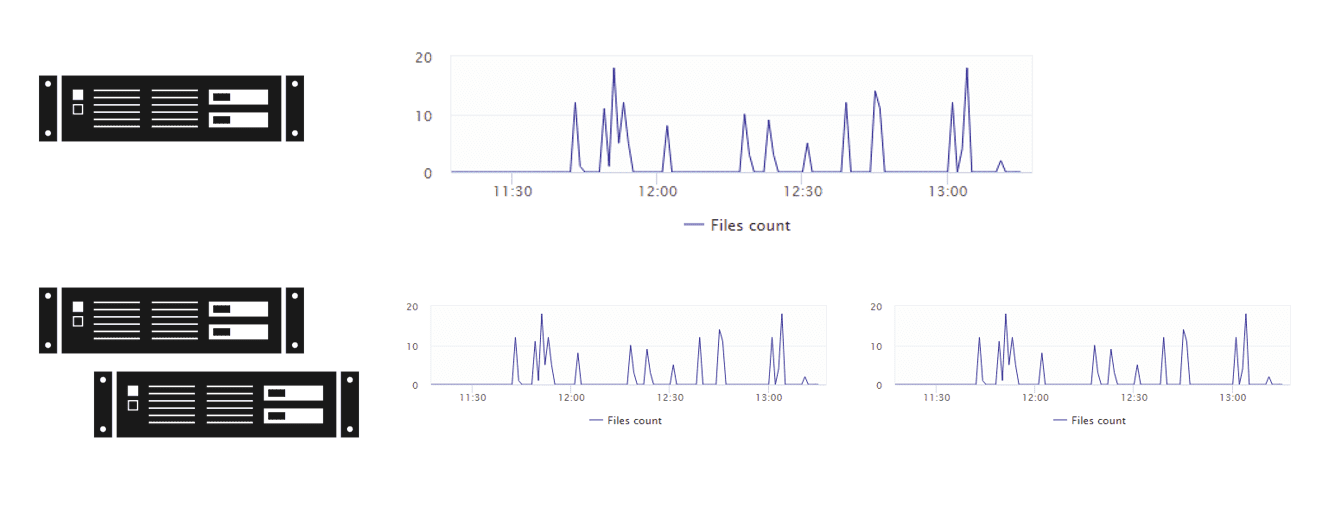

For example, if you define a new custom metric called Files count that counts the newly created files within a directory, this new metric can be collected either for one host or for two individual hosts. Collecting the same metric for two individual hosts results in two timeseries of the same custom metric type, as shown in the example below:

For the purposes of calculating monitoring consumption, collecting the same custom metric for two hosts counts as two separate custom metrics.

Applications

Custom

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:apps | Reported error count (by OS, app version) [custom] The number of all reported errors. | Count | autovalue |

| builtin:apps | Session count (by OS, app version) [custom] The number of captured user sessions. | Count | autovalue |

Mobile

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:apps | Session count (by OS, app version, crash replay feature status) [mobile] The number of captured user sessions. | Count | autovalue |

| builtin:apps | Session count (by OS, app version, full replay feature status) [mobile] The number of captured user sessions. | Count | autovalue |

| builtin:apps | Reported error count (by OS, app version) [mobile] The number of all reported errors. | Count | autovalue |

Web applications

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:apps | User action rate - affected by JavaScript errors (by key user action, user type) [web] The percentage of key user actions with detected JavaScript errors. | Percent (%) | autovalue |

| builtin:apps | Apdex (by key user action) [web] The average Apdex rating for key user actions. | autoavg | |

| builtin:apps | Action count - custom action (by key user action, browser) [web] The number of custom actions that are marked as key user actions. | Count | autovalue |

| builtin:apps | Action count - load action (by key user action, browser) [web] The number of load actions that are marked as key user actions. | Count | autovalue |

| builtin:apps | Action count - XHR action (by key user action, browser) [web] The number of XHR actions that are marked as key user actions. | Count | autovalue |

| builtin:apps | Cumulative Layout Shift - load action (by key user action, user type) [web] The score measuring the unexpected shifting of visible webpage elements. Calculated for load actions that are marked as key user actions. | autoavgcountmaxmedianminpercentilesum | |

| builtin:apps | Cumulative Layout Shift - load action (by key user action, geolocation, user type) [web] The score measuring the unexpected shifting of visible webpage elements. Calculated for load actions that are marked as key user actions. | autoavgcountmaxmedianminpercentilesum | |

| builtin:apps | Cumulative Layout Shift - load action (by key user action, browser) [web] The score measuring the unexpected shifting of visible webpage elements. Calculated for load actions that are marked as key user actions. | autoavgcountmaxmedianminpercentilesum | |

| builtin:apps | DOM interactive - load action (by key user action, browser) [web] The time taken until a page's status is set to "interactive" and it's ready to receive user input. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Action duration - custom action (by key user action, browser) [web] The duration of custom actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Action duration - load action (by key user action, browser) [web] The duration of load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Action duration - XHR action (by key user action, browser) [web] The duration of XHR actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Time to first byte - load action (by key user action, browser) [web] The time taken until the first byte of the response is received from the server, relevant application caches, or a local resource. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Time to first byte - XHR action (by key user action, browser) [web] The time taken until the first byte of the response is received from the server, relevant application caches, or a local resource. Calculated for XHR actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | First Input Delay - load action (by key user action, user type) [web] The time from the first interaction with a page to when the user agent can respond to that interaction. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | First Input Delay - load action (by key user action, geolocation, user type) [web] The time from the first interaction with a page to when the user agent can respond to that interaction. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | First Input Delay - load action (by key user action, browser) [web] The time from the first interaction with a page to when the user agent can respond to that interaction. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Largest Contentful Paint - load action (by key user action, user type) [web] The time taken to render the largest element in the viewport. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Largest Contentful Paint - load action (by key user action, geolocation, user type) [web] The time taken to render the largest element in the viewport. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Largest Contentful Paint - load action (by key user action, browser) [web] The time taken to render the largest element in the viewport. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Load event end - load action (by key user action, browser) [web] The time taken to complete the load event of a page. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Load event start - load action (by key user action, browser) [web] The time taken to begin the load event of a page. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Network contribution - load action (by key user action, user type) [web] The time taken to request and receive resources (including DNS lookup, redirect, and TCP connect time). Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Network contribution - XHR action (by key user action, user type) [web] The time taken to request and receive resources (including DNS lookup, redirect, and TCP connect time). Calculated for XHR actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Response end - load action (by key user action, browser) [web] (AKA HTML downloaded) The time taken until the user agent receives the last byte of the response or the transport connection is closed, whichever comes first. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Response end - XHR action (by key user action, browser) [web] The time taken until the user agent receives the last byte of the response or the transport connection is closed, whichever comes first. Calculated for XHR actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Server contribution - load action (by key user action, user type) [web] The time spent on server-side processing for a page. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Server contribution - XHR action (by key user action, user type) [web] The time spent on server-side processing for a page. Calculated for XHR actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Speed index - load action (by key user action, browser) [web] The score measuring how quickly the visible parts of a page are rendered. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Visually complete - load action (by key user action, browser) [web] The time taken to fully render content in the viewport. Calculated for load actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Visually complete - XHR action (by key user action, browser) [web] The time taken to fully render content in the viewport. Calculated for XHR actions that are marked as key user actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Error count (by key user action, user type, error type, error origin) [web] The number of detected errors that occurred during key user actions. | Count | autovalue |

| builtin:apps | User action count with errors (by key user action, user type) [web] The number of key user actions with detected errors. | Count | autovalue |

| builtin:apps | JavaScript errors count during user actions (by key user action, user type) [web] The number of detected JavaScript errors that occurred during key user actions. | Count | autovalue |

| builtin:apps | JavaScript error count without user actions (by key user action, user type) [web] The number of detected standalone JavaScript errors (occurred between key user actions). | Count | autovalue |

| builtin:apps | User action rate - affected by errors (by key user action, user type) [web] The percentage of key user actions with detected errors. | Percent (%) | autovalue |

| builtin:apps | Action count - custom action (by browser) [web] The number of custom actions. | Count | autovalue |

| builtin:apps | Action count - load action (by browser) [web] The number of load actions. | Count | autovalue |

| builtin:apps | Action count - XHR action (by browser) [web] The number of XHR actions. | Count | autovalue |

| builtin:apps | Action count (by Apdex category) [web] The number of user actions. | Count | autovalue |

| builtin:apps | Action with key performance metric count (by action type, geolocation, user type) [web] The number of user actions that have a key performance metric and mapped geolocation. | Count | autovalue |

| builtin:apps | Action duration - custom action (by browser) [web] The duration of custom actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Action duration - load action (by browser) [web] The duration of load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Action duration - XHR action (by browser) [web] The duration of XHR actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Actions per session average (by users, user type) [web] The average number of user actions per user session. | Count | autoavg |

| builtin:apps | Session count - estimated active sessions (by users, user type) [web] The estimated number of active user sessions. An active session is one in which a user has been confirmed to still be active at a given time. For this high-cardinality metric, the HyperLogLog algorithm is used to approximate the session count. | Count | autovalue |

| builtin:apps | User count - estimated active users (by users, user type) [web] The estimated number of unique active users. For this high-cardinality metric, the HyperLogLog algorithm is used to approximate the user count. | Count | autovalue |

| builtin:apps | User action rate - affected by JavaScript errors (by user type) [web] The percentage of user actions with detected JavaScript errors. | Percent (%) | autovalue |

| builtin:apps | Apdex (by user type) [web] | autoavg | |

| builtin:apps | Apdex (by geolocation, user type) [web] The average Apdex rating for user actions that have a mapped geolocation. | autoavg | |

| builtin:apps | Bounce rate (by users, user type) [web] The percentage of sessions in which users viewed only a single page and triggered only a single web request. Calculated by dividing single-page sessions by all sessions. | Percent (%) | autovalue |

| builtin:apps | Conversion rate - sessions (by users, user type) [web] The percentage of sessions in which at least one conversion goal was reached. Calculated by dividing converted sessions by all sessions. | Percent (%) | autovalue |

| builtin:apps | Session count - converted sessions (by users, user type) [web] The number of sessions in which at least one conversion goal was reached. | Count | autovalue |

| builtin:apps | Cumulative Layout Shift - load action (by user type) [web] The score measuring the unexpected shifting of visible webpage elements. Calculated for load actions. | autoavgcountmaxmedianminpercentilesum | |

| builtin:apps | Cumulative Layout Shift - load action (by geolocation, user type) [web] The score measuring the unexpected shifting of visible webpage elements. Calculated for load actions. | autoavgcountmaxmedianminpercentilesum | |

| builtin:apps | Cumulative Layout Shift - load action (by browser) [web] The score measuring the unexpected shifting of visible webpage elements. Calculated for load actions. | autoavgcountmaxmedianminpercentilesum | |

| builtin:apps | DOM interactive - load action (by browser) [web] The time taken until a page's status is set to "interactive" and it's ready to receive user input. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Session count - estimated ended sessions (by users, user type) [web] The number of completed user sessions. | Count | autovalue |

| builtin:apps | Rage click count [web] The number of detected rage clicks. | Count | autovalue |

| builtin:apps | Time to first byte - load action (by browser) [web] The time taken until the first byte of the response is received from the server, relevant application caches, or a local resource. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Time to first byte - XHR action (by browser) [web] The time taken until the first byte of the response is received from the server, relevant application caches, or a local resource. Calculated for XHR actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | First Input Delay - load action (by user type) [web] The time from the first interaction with a page to when the user agent can respond to that interaction. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | First Input Delay - load action (by geolocation, user type) [web] The time from the first interaction with a page to when the user agent can respond to that interaction. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | First Input Delay - load action (by browser) [web] The time from the first interaction with a page to when the user agent can respond to that interaction. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Largest Contentful Paint - load action (by user type) [web] The time taken to render the largest element in the viewport. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Largest Contentful Paint - load action (by geolocation, user type) [web] The time taken to render the largest element in the viewport. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Largest Contentful Paint - load action (by browser) [web] The time taken to render the largest element in the viewport. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Load event end - load action (by browser) [web] The time taken to complete the load event of a page. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Load event start - load action (by browser) [web] The time taken to begin the load event of a page. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Network contribution - load action (by user type) [web] The time taken to request and receive resources (including DNS lookup, redirect, and TCP connect time). Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Network contribution - XHR action (by user type) [web] The time taken to request and receive resources (including DNS lookup, redirect, and TCP connect time). Calculated for XHR actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Response end - load action (by browser) [web] (AKA HTML downloaded) The time taken until the user agent receives the last byte of the response or the transport connection is closed, whichever comes first. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Response end - XHR action (by browser) [web] The time taken until the user agent receives the last byte of the response or the transport connection is closed, whichever comes first. Calculated for XHR actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Server contribution - load action (by user type) [web] The time spent on server-side processing for a page. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Server contribution - XHR action (by user type) [web] The time spent on server-side processing for a page. Calculated for XHR actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Session duration (by users, user type) [web] The average duration of user sessions. | Microsecond | autoavg |

| builtin:apps | Speed index - load action (by browser) [web] The score measuring how quickly the visible parts of a page are rendered. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Session count - estimated started sessions (by users, user type) [web] The number of started user sessions. | Count | autovalue |

| builtin:apps | Visually complete - load action (by browser) [web] The time taken to fully render content in the viewport. Calculated for load actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Visually complete - XHR action (by browser) [web] The time taken to fully render content in the viewport. Calculated for XHR actions. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Error count (by user type, error type, error origin) [web] The number of detected errors. | Count | autovalue |

| builtin:apps | Error count during user actions (by user type, error type, error origin) [web] The number of detected errors that occurred during user actions. | Count | autovalue |

| builtin:apps | Standalone error count (by user type, error type, error origin) [web] The number of detected standalone errors (occurred between user actions). | Count | autovalue |

| builtin:apps | User action count - with errors (by user type) [web] The number of user actions with detected errors. | Count | autovalue |

| builtin:apps | Error count for Davis (by user type, error type, error origin, error context)) [web] The number of errors that were included in Davis AI problem detection and analysis. | Count | autovalue |

| builtin:apps | Interaction to next paint | Millisecond | autocountmaxmedianminpercentile |

| builtin:apps | JavaScript error count - during user actions (by user type) [web] The number of detected JavaScript errors that occurred during user actions. | Count | autovalue |

| builtin:apps | JavaScript error count - without user actions (by user type) [web] The number of detected standalone JavaScript errors (occurred between user actions). | Count | autovalue |

| builtin:apps | User action rate - affected by errors (by user type) [web] The percentage of user actions with detected errors. | Percent (%) | autovalue |

Mobile and custom apps

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:apps | Apdex (by OS, geolocation) [mobile, custom] The Apdex rating for all captured user actions. | autovalue | |

| builtin:apps | Apdex (by OS, app version) [mobile, custom] The Apdex rating for all captured user actions. | autovalue | |

| builtin:apps | User count - estimated users affected by crashes (by OS) [mobile, custom] The estimated number of unique users affected by a crash. For this high cardinality metric, the HyperLogLog algorithm is used to approximate the number of users. | Count | autovalue |

| builtin:apps | User count - estimated users affected by crashes (by OS, app version) [mobile, custom] The estimated number of unique users affected by a crash. For this high cardinality metric, the HyperLogLog algorithm is used to approximate the number of users. | Count | autovalue |

| builtin:apps | User rate - estimated users affected by crashes (by OS) [mobile, custom] The estimated percentage of unique users affected by a crash. For this high cardinality metric, the HyperLogLog algorithm is used to approximate the number of users. | Percent (%) | autovalue |

| builtin:apps | Crash count (by OS, geolocation) [mobile, custom] The number of detected crashes. | Count | autovalue |

| builtin:apps | Crash count (by OS, app version) [mobile, custom] The number of detected crashes. | Count | autovalue |

| builtin:apps | Crash count (by OS, app version) [mobile, custom] The number of detected crashes. | Count | autovalue |

| builtin:apps | User rate - estimated crash free users (by OS) [mobile, custom] The estimated percentage of unique users not affected by a crash. For this high cardinality metric, the HyperLogLog algorithm is used to approximate the number of users. | Percent (%) | autovalue |

| builtin:apps | Apdex (by key user action, OS) [mobile, custom] The Apdex rating for all captured key user actions. | autovalue | |

| builtin:apps | Action count (by key user action, OS, Apdex category) [mobile, custom] The number of captured key user actions. | Count | autovalue |

| builtin:apps | Action duration (by key user action, OS) [mobile, custom] The duration of key user actions. | Microsecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Reported error count (by key user action, OS) [mobile, custom] The number of reported errors for key user actions. | Count | autovalue |

| builtin:apps | Request count (by key user action, OS) [mobile, custom] The number of captured web requests associated with key user actions. | Count | autovalue |

| builtin:apps | Request duration (by key user action, OS) [mobile, custom] The duration of web requests for key user actions. Be aware that this metric is measured in microseconds while other request duration metrics for mobile and custom apps are measured in milliseconds. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Request error count (by key user action, OS) [mobile, custom] The number of detected web request errors for key user actions. | Count | autovalue |

| builtin:apps | Request error rate (by key user action, OS) [mobile, custom] The percentage of web requests with detected errors for key user actions | Percent (%) | autovalue |

| builtin:apps | New user count (by OS) [mobile, custom] The number of users that launched the application(s) for the first time. The metric is tied to specific devices, so users are counted multiple times if they install the application on multiple devices. The metric doesn't distinguish between multiple users that share the same device and application installation. | Count | autovalue |

| builtin:apps | Request count (by OS, provider) [mobile, custom] The number of captured web requests. | Count | autovalue |

| builtin:apps | Request count (by OS, app version) [mobile, custom] The number of captured web requests. | Count | autovalue |

| builtin:apps | Request error count (by OS, provider) [mobile, custom] The number of detected web request errors. | Count | autovalue |

| builtin:apps | Request error count (by OS, app version) [mobile, custom] The number of detected web request errors. | Count | autovalue |

| builtin:apps | Request error rate (by OS, provider) [mobile, custom] The percentage of web requests with detected errors. | Percent (%) | autovalue |

| builtin:apps | Request error rate (by OS, app version) [mobile, custom] The percentage of web requests with detected errors. | Percent (%) | autovalue |

| builtin:apps | Request duration (by OS, provider) [mobile, custom] The duration of web requests. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Request duration (by OS, app version) [mobile, custom] The duration of web requests. | Millisecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | Session count (by agent version, OS) [mobile, custom] The number of captured user sessions. | Count | autovalue |

| builtin:apps | Session count (by OS, crash reporting level) [mobile, custom] The number of captured user sessions. | Count | autovalue |

| builtin:apps | Session count (by OS, data collection level) [mobile, custom] The number of captured user sessions. | Count | autovalue |

| builtin:apps | Session count - estimated (by OS, geolocation) [mobile, custom] The estimated number of captured user sessions. For this high cardinality metric, the HyperLogLog algorithm is used to approximate the number of sessions. | Count | autovalue |

| builtin:apps | Session count (by OS, app version) [mobile, custom] The number of captured user sessions. | Count | autovalue |

| builtin:apps | Action count (by geolocation, Apdex category) [mobile, custom] The number of captured user actions. | Count | autovalue |

| builtin:apps | Action count (by OS, Apdex category) [mobile, custom] The number of captured user actions. | Count | autovalue |

| builtin:apps | Action count (by OS, app version) [mobile, custom] The number of captured user actions. | Count | autovalue |

| builtin:apps | Action duration (by OS, app version) [mobile, custom] The duration of user actions. | Microsecond | autoavgcountmaxmedianminpercentilesum |

| builtin:apps | User count - estimated (by OS, geolocation) [mobile, custom] The estimated number of unique users that have a mapped geolocation. The metric is based on 'internalUserId'. When 'dataCollectionLevel' is set to 'performance' or 'off', 'internalUserId' is changed at each app start. For this high cardinality metric, the HyperLogLog algorithm is used to approximate the number of users. | Count | autovalue |

| builtin:apps | User count - estimated (by OS, app version) [mobile, custom] The estimated number of unique users. The metric is based on 'internalUserId'. When 'dataCollectionLevel' is set to 'performance' or 'off', 'internalUserId' is changed at each app start. For this high cardinality metric, the HyperLogLog algorithm is used to approximate the number of users. | Count | autovalue |

Billing

Applications

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | Session count - billed and unbilled [custom] The number of billed and unbilled user sessions. To get only the number of billed sessions, set the "Type" filter to "Billed". | Count | autovalue |

| builtin:billing | Total user action and session properties The number of billed user action and user session properties. | Count | autovalue |

| builtin:billing | Session count - billed and unbilled - with Session Replay [mobile] The number of billed and unbilled user sessions that include Session Replay data. To get only the number of billed sessions, set the "Type" filter to "Billed". | Count | autovalue |

| builtin:billing | Session count - billed and unbilled [mobile] The total number of billed and unbilled user sessions (with and without Session Replay data). To get only the number of billed sessions, set the "Type" filter to "Billed". | Count | autovalue |

| builtin:billing | Total user action and session properties The number of billed user action and user session properties. | Count | autovalue |

| builtin:billing | Session count - billed and unbilled - with Session Replay [web] The number of billed and unbilled user sessions that include Session Replay data. To get only the number of billed sessions, set the "Type" filter to "Billed". | Count | autovalue |

| builtin:billing | Session count - billed and unbilled - without Session Replay [web] The number of billed and unbilled user sessions that do not include Session Replay data. To get only the number of billed sessions, set the "Type" filter to "Billed". | Count | autovalue |

| builtin:billing | Total user action and session properties The number of billed user action and user session properties. | Count | autovalue |

Custom events classic

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Total Custom Events Classic billing usage The number of custom events ingested aggregated over all monitored entities. Custom events include events sent to Dynatrace via the Events API or events created by a log event extraction rule. Use this total metric to query longer timeframes without losing precision or performance. | Count | autovalue |

| builtin:billing | (DPS) Custom Events Classic billing usage by monitored entity The number of custom events ingested split by monitored entity. Custom events include events sent to Dynatrace via the Events API or events created by a log event extraction rule. For details on the events billed, refer to the usage_by_event_info metric. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

| builtin:billing | (DPS) Custom Events Classic billing usage by event info The number of custom events ingested split by event info. Custom events include events sent to Dynatrace via the Events API or events created by a log event extraction rule. The info contains the context of the event plus the configuration ID. For details on the related monitored entities, refer to the usage_by_entity metric. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

Custom metrics classic

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Recorded metric data points per metric key The number of reported metric data points split by metric key. This metric does not account for included metric data points available to your environment. | Count | autovalue |

| builtin:billing | (DPS) Total billed metric data points The total number of metric data points after deducting the included metric data points. This is the rate-card value used for billing. Use this total metric to query longer timeframes without losing precision or performance. | Count | autovalue |

| builtin:billing | (DPS) Total metric data points billable for Foundation & Discovery hosts The number of metric data points billable for Foundation & Discovery hosts. | Count | autovalue |

| builtin:billing | (DPS) Total metric data points billed for Full-Stack hosts The number of metric data points billed for Full-Stack hosts. To view the unadjusted usage per host, use builtin:billing.full_stack_monitoring.metric_data_points.ingested_by_host . This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. | Count | autovalue |

| builtin:billing | (DPS) Total metric data points billed for Infrastructure-monitored hosts The number of metric data points billed for Infrastructure-monitored hosts. To view the unadjusted usage per host, use builtin:billing.infrastructure_monitoring.metric_data_points.ingested_by_host . This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. | Count | autovalue |

| builtin:billing | (DPS) Total metric data points billed by other entities The number of metric data points billed that cannot be assigned to a host. The values reported in this metric are not eligible for included metric deduction and will be billed as is. This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. o view the monitored entities that consume this usage, use the other_by_entity metric. | Count | autovalue |

| builtin:billing | (DPS) Billed metric data points reported and split by other entities The number of billed metric data points split by entities that cannot be assigned to a host. The values reported in this metric are not eligible for included metric deduction and will be billed as is. This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

Custom traces classic

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Total Custom Traces Classic billing usage The number of spans ingested aggregated over all monitored entities. A span is a single operation within a distributed trace, ingested into Dynatrace. Use this total metric to query longer timeframes without losing precision or performance. | Count | autovalue |

| builtin:billing | (DPS) Custom Traces Classic billing usage by monitored entity The number of spans ingested split by monitored entity. A span is a single operation within a distributed trace, ingested into Dynatrace. For details on span types, refer to the usage_by_span_type metric. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

| builtin:billing | (DPS) Custom Traces Classic billing usage by span type The number of spans ingested split by span type. A span is a single operation within a distributed trace, ingested into Dynatrace. Span kinds can be CLIENT, SERVER, PRODUCER, CONSUMER or INTERNAL For details on the related monitored entities, refer to the usage_by_entity metric. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

DDU

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | DDU events consumption by event info License consumption of Davis data units by events pool split by event info | autovalue | |

| builtin:billing | DDU events consumption by monitored entity License consumption of Davis data units by events pool split by monitored entity | autovalue | |

| builtin:billing | Total DDU events consumption Sum of license consumption of Davis data units aggregated over all monitored entities for the events pool | autovalue | |

| builtin:billing | DDU log consumption by log path License consumption of Davis data units by log pool split by log path | autovalue | |

| builtin:billing | DDU log consumption by monitored entity License consumption of Davis data units by log pool split by monitored entity | autovalue | |

| builtin:billing | Total DDU log consumption Sum of license consumption of Davis data units aggregated over all logs for the log pool | autovalue | |

| builtin:billing | DDU metrics consumption by monitored entity License consumption of Davis data units by metrics pool split by monitored entity | autovalue | |

| builtin:billing | DDU metrics consumption by monitored entity w/o host-unit included DDUs License consumption of Davis data units by metrics pool split by monitored entity (aggregates host-unit included metrics, so value might be higher than actual consumption) | autovalue | |

| builtin:billing | Reported metrics DDUs by metric key Reported Davis data units usage by metrics pool split by metric key | autovalue | |

| builtin:billing | Total DDU metrics consumption Sum of license consumption of Davis data units aggregated over all metrics for the metrics pool | autovalue | |

| builtin:billing | DDU serverless consumption by function License consumption of Davis data units by serverless pool split by Amazon Resource Names (ARNs) | autovalue | |

| builtin:billing | DDU serverless consumption by service License consumption of Davis data units by serverless pool split by service | autovalue | |

| builtin:billing | Total DDU serverless consumption Sum of license consumption of Davis data units aggregated over all services for the serverless pool | autovalue | |

| builtin:billing | DDU traces consumption by span type License consumption of Davis data units by traces pool split by SpanKind, as defined in OpenTelemetry specification | autovalue | |

| builtin:billing | DDU traces consumption by monitored entity License consumption of Davis data units by traces pool split by monitored entity | autovalue | |

| builtin:billing | Total DDU traces consumption Sum of license consumption of Davis data units aggregated over all monitored entities for the traces pool | autovalue | |

| builtin:billing | DDU included per host Included Davis data units per host | autovalue | |

| builtin:billing | DDU included metric data points per host Included metric data points per host | autovalue |

Events

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | [Deprecated] (DPS) Business events usage - Ingest & Process Business events Ingest & Process usage, tracked as bytes ingested within the hour. This trailing metric is reported hourly for the previous hour. Metric values are reported with up to a one-hour delay. [Deprecated] This metric is replaced by billing usage events. | Byte | autovalue |

| builtin:billing | [Deprecated] (DPS) Business events usage - Query Business events Query usage, tracked as bytes read within the hour. This trailing metric is reported hourly for the previous hour. Metric values are reported with up to a one-hour delay. [Deprecated] This metric is replaced by billing usage events. | Byte | autovalue |

| builtin:billing | [Deprecated] (DPS) Business events usage - Retain Business events Retain usage, tracked as total storage used within the hour, in bytes. This trailing metric is reported hourly for the previous hour. Metric values are reported with up to a one-hour delay. [Deprecated] This metric is replaced by billing usage events. | Byte | autoavgmaxmin |

Foundation and discovery

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Ingested metric data points for Foundation & Discovery The number of metric data points aggregated over all Foundation & Discovery hosts. | Count | autovalue |

| builtin:billing | (DPS) Ingested metric data points for Foundation & Discovery per host The number of metric data points split by Foundation & Discovery hosts. See further details. | Count | autovalue |

| builtin:billing | (DPS) Foundation & Discovery billing usage The total number of host-hours being monitored by Foundation & Discovery, counted in 15 min intervals. | Count | autovalue |

| builtin:billing | (DPS) Foundation & Discovery billing usage per host The host-hours being monitored by Foundation & Discovery, counted in 15 min intervals. See further details. | Count | autovalue |

Full stack monitoring

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Available included metric data points for Full-Stack hosts The total number of included metric data points that can be deducted from the metric data points reported by Full-Stack hosts. This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. To view the number of applied included metric data points, use builtin:billing.full_stack_monitoring.metric_data_points.included_used . If the difference between this metric and the applied metrics is greater than 0, then more metrics can be ingested using Full-Stack Monitoring without incurring additional costs. | Count | autovalue |

| builtin:billing | (DPS) Used included metric data points for Full-Stack hosts The number of consumed included metric data points per host monitored with Full-Stack Monitoring. This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. To view the number of potentially available included metrics, use builtin:billing.full_stack_monitoring.metric_data_points.included_used . If the difference between this metric and the available metrics is greater than zero, then that means that more metrics could be ingested on Full-Stack hosts without incurring additional costs. | Count | autovalue |

| builtin:billing | (DPS) Total metric data points reported by Full-Stack hosts The number of metric data points aggregated over all Full-Stack hosts. The values reported in this metric are eligible for included-metric-data-point deduction. Use this total metric to query longer timeframes without losing precision or performance. This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. To view usage on a per-host basis, use builtin:billing.full_stack_monitoring.metric_data_points.ingested_by_host . | Count | autovalue |

| builtin:billing | (DPS) Metric data points reported and split by Full-Stack hosts The number of metric data points split by Full-Stack hosts. The values reported in this metric are eligible for included-metric-data-point deduction. This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. The pool of available included metrics for a "15-minute interval" is visible via builtin:billing.full_stack_monitoring.metric_data_points.included . To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. See further details. | Count | autovalue |

| builtin:billing | (DPS) Full-Stack Monitoring billing usage The total GiB memory of hosts being monitored in full-stack mode, counted in 15 min intervals. Use this total metric to query longer timeframes without losing precision or performance. For details on the hosts causing the usage, refer to the usage_per_host metric. For details on the containers causing the usage, refer to the usage_per_container metric. | GibiByte | autovalue |

| builtin:billing | (DPS) Full-stack usage by container type The total GiB memory of containers being monitored in full-stack mode, counted in 15 min intervals. | GibiByte | autovalue |

| builtin:billing | (DPS) Full-Stack Monitoring billing usage per host The GiB memory per host being monitored in full-stack mode, counted in 15 min intervals. For example, a host with 8 GiB of RAM monitored for 1 hour has 4 data points with a value of | GibiByte | autovalue |

Infrastructure monitoring

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Available included metric data points for Infrastructure-monitored hosts The total number of included metric data points that can be deducted from the metric data points reported by Infrastructure-monitored hosts. This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. To view the number of applied included metric data points, use builtin:billing.infrastructure_monitoring.metric_data_points.included_used . If the difference between this metric and the applied metrics is greater than zero, then that means that more metrics could be ingested on Infrastructure-monitored hosts without incurring additional costs. | Count | autovalue |

| builtin:billing | (DPS) Used included metric data points for Infrastructure-monitored hosts The number of consumed included metric data points for Infrastructure-monitored hosts. This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. To view the number of potentially available included metrics, use builtin:billing.infrastructure_monitoring.metric_data_points.included_used . If the difference between this metric and the available metrics is greater than zero, then that means that more metrics could be ingested on Infrastructure-monitored hosts without incurring additional costs. | Count | autovalue |

| builtin:billing | (DPS) Total metric data points reported by Infrastructure-monitored hosts The number of metric data points aggregated over all Infrastructure-monitored hosts. The values reported in this metric are eligible for included-metric-data-point deduction. Use this total metric to query longer timeframes without losing precision or performance. This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. To view usage on a per-host basis, use the builtin:billing.full_stack_monitoring.metric_data_points.ingested_by_host . | Count | autovalue |

| builtin:billing | (DPS) Metric data points reported and split by Infrastructure-monitored hosts The number of metric data points split by Infrastructure-monitored hosts. The values reported in this metric are eligible for included-metric-data-point deduction. This trailing metric is reported at 15-minute intervals with up to a 15-minute delay. The pool of available included metrics for a "15-minute interval" is visible via builtin:billing.infrastructure_monitoring.metric_data_points.included . To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. See further details. | Count | autovalue |

| builtin:billing | (DPS) Infrastructure Monitoring billing usage The total number of host-hours being monitored in infrastructure-only mode, counted in 15 min intervals. Use this total metric to query longer timeframes without losing precision or performance. For details on the hosts causing the usage, refer to the usage_per_host metric. | Count | autovalue |

| builtin:billing | (DPS) Infrastructure Monitoring billing usage per host The host-hours being monitored in infrastructure-only mode, counted in 15 min intervals. A host monitored for the whole hour has 4 data points with a value of 0.25, regardless of the memory size. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. See further details. | Count | autovalue |

Kubernetes monitoring

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Kubernetes Platform Monitoring billing usage The total number of monitored Kubernetes pods per hour, split by cluster and namespace and counted in 15 min intervals. A pod monitored for the whole hour has 4 data points with a value of 0.25. | Count | autovalue |

Log

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Log Management and Analytics usage - Ingest & Process Log Management and Analytics Ingest & Process usage, tracked as bytes ingested within the hour. This trailing metric is reported hourly for the previous hour. Metric values are reported with up to a one-hour delay. | Byte | autovalue |

| builtin:billing | (DPS) Log Management and Analytics usage - Query Log Management and Analytics Query usage, tracked as bytes read within the hour. This trailing metric is reported hourly for the previous hour. Metric values are reported with up to a one-hour delay. | Byte | autovalue |

| builtin:billing | (DPS) Log Management and Analytics usage - Retain Log Management and Analytics Retain usage, tracked as total storage used within the hour, in bytes. This trailing metric is reported hourly for the previous hour. Metric values are reported with up to a one-hour delay. | Byte | autoavgmaxmin |

Log monitoring classic

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Total Log Monitoring Classic billing usage The number of log records ingested aggregated over all monitored entities. A log record is recognized by either a timestamp or a JSON object. Use this total metric to query longer timeframes without losing precision or performance. | Count | autovalue |

| builtin:billing | (DPS) Log Monitoring Classic billing usage by monitored entity The number of log records ingested split by monitored entity. A log record is recognized by either a timestamp or a JSON object. For details on the log path, refer to the usage_by_log_path metric. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

| builtin:billing | (DPS) Log Monitoring Classic billing usage by log path The number of log records ingested split by log path. A log record is recognized by either a timestamp or a JSON object. For details on the related monitored entities, refer to the usage_by_entity metric. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

Mainframe monitoring

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Mainframe Monitoring billing usage The total number of MSU-hours being monitored, counted in 15 min intervals. | MSU | autovalue |

Real user monitoring

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Total Real-User Monitoring Property (mobile) billing usage (Mobile) User action and session properties count. For details on how usage is calculated, refer to the documentation or builtin:billing.real_user_monitoring.web.property.usage_by_application . Use this total metric to query longer timeframes without losing precision or performance. | Count | autovalue |

| builtin:billing | (DPS) Real-User Monitoring Property (mobile) billing usage by application (Mobile) User action and session properties count by application. The billed value is calculated based on the number of sessions reported in builtin:billing.real_user_monitoring.mobile.session.usage_by_app + builtin:billing.real_user_monitoring.mobile.session_with_replay.usage_by_app . plus the number of configured properties that exceed the included number of properties (free of charge) offered for a given application. Data points are only written for billed sessions. If the value is 0, you have available metric data points. This trailing metric is reported hourly for the previous hour. Metric values are reported with up to a one-hour delay. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

| builtin:billing | (DPS) Total Real-User Monitoring (mobile) billing usage (Mobile) Session count without Session Replay. The value billed for each session is the session duration measured in hours. So a 3-hour session results in a single data-point value of | Count | autovalue |

| builtin:billing | (DPS) Real-User Monitoring (mobile) billing usage by application (Mobile) Session count without Session Replay split by application The value billed for each session is the session duration measured in hours. So a 3-hour session results in a single data-point value of | Count | autovalue |

| builtin:billing | (DPS) Total Real-User Monitoring (mobile) with Session Replay billing usage (Mobile) Session count with Session Replay. The value billed for each session is the session duration measured in hours. So a 3-hour session results in a single data-point value of | Count | autovalue |

| builtin:billing | (DPS) Real-User Monitoring (mobile) with Session Replay billing usage by application (Mobile) Session count with Session Replay split by application. The value billed for each session is the session duration measured in hours. So a 3-hour session results in a single data-point value of | Count | autovalue |

| builtin:billing | (DPS) Total Real-User Monitoring Property (web) billing usage (Web) User action and session properties count. For details on how usage is calculated, refer to the documentation or builtin:billing.real_user_monitoring.web.property.usage_by_application . Use this total metric to query longer timeframes without losing precision or performance. | Count | autovalue |

| builtin:billing | (DPS) Real-User Monitoring Property (web) billing usage by application (Web) User action and session properties count by application. The billed value is calculated based on the number of sessions reported in builtin:billing.real_user_monitoring.web.session.usage_by_app + builtin:billing.real_user_monitoring.web.session_with_replay.usage_by_app . plus the number of configured properties that exceed the included number of properties (free of charge) offered for a given application. Data points are only written for billed sessions. If the value is 0, you have available metric data points. This trailing metric is reported hourly for the previous hour. Metric values are reported with up to a one-hour delay. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

| builtin:billing | (DPS) Total Real-User Monitoring (web) billing usage (Web) Session count without Session Replay. The value billed for each session is the session duration measured in hours. So a 3-hour session results in a single data-point value of | Count | autovalue |

| builtin:billing | (DPS) Real-User Monitoring (web) billing usage by application (Web) Session count without Session Replay split by application. The value billed for each session is the session duration measured in hours. So a 3-hour session results in a single data-point value of | Count | autovalue |

| builtin:billing | (DPS) Total Real-User Monitoring (web) with Session Replay billing usage (Web) Session count with Session Replay. The value billed for each session is the session duration measured in hours. So a 3-hour session results in a single data-point value of | Count | autovalue |

| builtin:billing | (DPS) Real-User Monitoring (web) with Session Replay billing usage by application (Web) Session count with Session Replay split by application. The value billed for each session is the session duration measured in hours. So a 3-hour session results in a single data-point value of | Count | autovalue |

Runtime application protection

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Runtime Application Protection billing usage Total GiB-memory of hosts protected with Runtime Application Protection (Application Security), counted at 15-minute intervals. Use this total metric to query longer timeframes without losing precision or performance. For details on the monitored hosts, refer to the usage_per_host metric. | GibiByte | autovalue |

| builtin:billing | (DPS) Runtime Application Protection billing usage per host GiB-memory per host protected with Runtime Application Protection (Application Security), counted at 15-minute intervals. For example, a host with 8 GiB of RAM monitored for 1 hour has 4 data points with a value of | GibiByte | autovalue |

Runtime vulnerability analytics

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Runtime Vulnerability Analytics billing usage Total GiB-memory of hosts protected with Runtime Vulnerability Analytics (Application Security), counted at 15-minute intervals. Use this total metric to query longer timeframes without losing precision or performance. For details on the monitored hosts, refer to the usage_per_host metric. | GibiByte | autovalue |

| builtin:billing | (DPS) Runtime Vulnerability Analytics billing usage per host GiB-memory per hosts protected with Runtime Vulnerability Analytics (Application Security), counted at 15-minute intervals. For example, a host with 8 GiB of RAM monitored for 1 hour has 4 data points with a value of | GibiByte | autovalue |

Serverless functions classic

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | (DPS) Total Serverless Functions Classic billing usage The number of invocations of the serverless function aggregated over all monitored entities. The term "function invocations" is equivalent to "function requests" or "function executions". Use this total metric to query longer timeframes without losing precision or performance. | Count | autovalue |

| builtin:billing | (DPS) Serverless Functions Classic billing usage by monitored entity The number of invocations of the serverless function split by monitored entity. The term "function invocations" is equivalent to "function requests" or "function executions". For details on which functions are invoked, refer to the usage_by_function metric. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

| builtin:billing | (DPS) Serverless Functions Classic billing usage by function The number of invocations of the serverless function split by function. The term "function invocations" is equivalent to "function requests" or "function executions". For details on the related monitored entities, refer to the usage_by_entity metric. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

Synthetic

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:billing | Actions The number of billed actions consumed by browser monitors. | Count | autovalue |

| builtin:billing | (DPS) Total Browser Monitor or Clickpath billing usage The number of synthetic actions which triggers a web request that includes a page load, navigation event, or action that triggers an XHR or Fetch request. Scroll downs, keystrokes, or clicks that don't trigger web requests aren't counted as such actions. Use this total metric to query longer timeframes without losing precision or performance. | Count | autovalue |

| builtin:billing | (DPS) Browser Monitor or Clickpath billing usage per synthetic browser monitor The number of synthetic actions which triggers a web request that includes a page load, navigation event, or action that triggers an XHR or Fetch request. Scroll downs, keystrokes, or clicks that don't trigger web requests aren't counted as such actions. Actions are split by the Synthetic Browser Monitors that caused them. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

| builtin:billing | Third-party results The number of billed results consumed by third-party monitors. | Count | autovalue |

| builtin:billing | (DPS) Total Third-Party Synthetic API Ingestion billing usage The number of synthetic test results pushed into Dynatrace with Synthetic 3rd party API. Use this total metric to query longer timeframes without losing precision or performance. | Count | autovalue |

| builtin:billing | (DPS) Third-Party Synthetic API Ingestion billing usage per external browser monitor The number of synthetic test results pushed into Dynatrace with Synthetic 3rd party API. The ingestions are split by external Synthetic Browser Monitors for which the results where ingested. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

| builtin:billing | Requests The number of billed requests consumed by HTTP monitors. | Count | autovalue |

| builtin:billing | (DPS) Total HTTP monitor billing usage The number of HTTP requests performed during execution of synthetic HTTP monitor. Use this total metric to query longer timeframes without losing precision or performance. | Count | autovalue |

| builtin:billing | (DPS) HTTP monitor billing usage per HTTP monitor The number of HTTP requests performed, split by synthetic HTTP monitor. To improve performance and avoid exceeding query limits when working with longer timeframes, use the total metric. | Count | autovalue |

Cloud

AWS

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:cloud | Number of running EC2 instances (AZ) | Count | autoavgmaxmin |

Azure

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:cloud | Number of starting VMs in region | Count | autoavgmaxmin |

| builtin:cloud | Number of active VMs in region | Count | autoavgmaxmin |

| builtin:cloud | Number of stopped VMs in region | Count | autoavgmaxmin |

| builtin:cloud | Number of starting VMs in scale set | Count | autoavgmaxmin |

| builtin:cloud | Number of active VMs in scale set | Count | autoavgmaxmin |

| builtin:cloud | Number of stopped VMs in scale set | Count | autoavgmaxmin |

Cloud Foundry

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:cloud | CF: Time to fetch cell states The time that the auctioneer took to fetch state from all the cells when running its auction. | Nanosecond | autoavgmaxmin |

| builtin:cloud | CF: App instance placement failures The number of application instances that the auctioneer failed to place on Diego cells. | Count | autovalue |

| builtin:cloud | CF: App instance starts The number of application instances that the auctioneer successfully placed on Diego cells. | Count | autovalue |

| builtin:cloud | CF: Task placement failures The number of tasks that the auctioneer failed to place on Diego cells. | Count | autovalue |

| builtin:cloud | CF: 502 responses The number of responses that indicate invalid service responses produced by an application. | Count | autovalue |

| builtin:cloud | CF: Response latency The average response time from the application to clients. | Millisecond | autoavgmaxmin |

| builtin:cloud | CF: 5xx responses The number of responses that indicate repeatedly crashing apps or response issues from applications. | Count | autovalue |

| builtin:cloud | CF: Total requests The number of all requests representing the overall traffic flow. | Count | autovalue |

Openstack

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:cloud | CPU usage | Percent (%) | autoavgmaxmin |

| builtin:cloud | Disk allocation | Byte | autoavgmaxmin |

| builtin:cloud | Disk capacity | Byte | autoavgmaxmin |

| builtin:cloud | Memory resident | Byte | autoavgmaxmin |

| builtin:cloud | Memory usage | Byte | autoavgmaxmin |

| builtin:cloud | Network incoming bytes rate | Byte | autoavgmaxmin |

| builtin:cloud | Network outgoing bytes rate | Byte | autoavgmaxmin |

VMware

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:cloud | Host CPU usage % | Percent (%) | autoavgmaxmin |

| builtin:cloud | Host disk usage rate | kB | autoavgmaxmin |

| builtin:cloud | Host disk commands aborted | Count | autovalue |

| builtin:cloud | Host disk queue latency | Millisecond | autoavgmaxmin |

| builtin:cloud | Host disk read IOPS | Per second | autoavgmaxmin |

| builtin:cloud | Host disk read latency | Millisecond | autoavgmaxmin |

| builtin:cloud | Host disk read rate | kB | autoavgmaxmin |

| builtin:cloud | Host disk write IOPS | Per second | autoavgmaxmin |

| builtin:cloud | Host disk write latency | Millisecond | autoavgmaxmin |

| builtin:cloud | Host disk write rate | kB | autoavgmaxmin |

| builtin:cloud | Host compression rate | Kibibyte | autoavgmaxmin |

| builtin:cloud | Host memory consumed | Kibibyte | autoavgmaxmin |

| builtin:cloud | Host decompression rate | Kibibyte | autoavgmaxmin |

| builtin:cloud | Host swap in rate | Kibibyte | autoavgmaxmin |

| builtin:cloud | Host swap out rate | Kibibyte | autoavgmaxmin |

| builtin:cloud | Host network data received rate | kB | autoavgmaxmin |

| builtin:cloud | Host network data transmitted rate | kB | autoavgmaxmin |

| builtin:cloud | Data received rate | kB | autoavgmaxmin |

| builtin:cloud | Data transmitted rate | kB | autoavgmaxmin |

| builtin:cloud | Packets received dropped | Count | autovalue |

| builtin:cloud | Packets transmitted dropped | Count | autovalue |

| builtin:cloud | Number of VMs | Count | autoavgmaxmin |

| builtin:cloud | Number of VMs powered-off | Count | autoavgmaxmin |

| builtin:cloud | Number of VMs suspended | Count | autoavgmaxmin |

| builtin:cloud | Host availability % | Percent (%) | autoavg |

| builtin:cloud | VM CPU ready % | Percent (%) | autoavgmaxmin |

| builtin:cloud | VM swap wait | Millisecond | autovalue |

| builtin:cloud | VM CPU usage MHz | Count | autoavgmaxmin |

| builtin:cloud | VM CPU usage % | Percent (%) | autoavgmaxmin |

| builtin:cloud | VM disk usage rate | kB | autoavgmaxmin |

| builtin:cloud | VM memory active | Kibibyte | autoavgmaxmin |

| builtin:cloud | VM compression rate | Kibibyte | autoavgmaxmin |

| builtin:cloud | VM memory consumed | Kibibyte | autoavgmaxmin |

| builtin:cloud | VM decompression rate | Kibibyte | autoavgmaxmin |

| builtin:cloud | VM swap in rate | Kibibyte | autoavgmaxmin |

| builtin:cloud | VM swap out rate | Kibibyte | autoavgmaxmin |

| builtin:cloud | VM network data received rate | kB | autoavgmaxmin |

| builtin:cloud | VM network data transmitted rate | kB | autoavgmaxmin |

Containers

CPU

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:containers | Containers: CPU limit, mCores CPU resource limit per container in millicores. | Millicores | autoavgmaxmin |

| builtin:containers | Containers: CPU logical cores Number of logical CPU cores of the host. | Cores | autoavgmaxmin |

| builtin:containers | Containers: CPU shares Number of CPU shares allocated per container. | Count | autoavgmaxmin |

| builtin:containers | Containers: CPU throttling, mCores CPU throttling per container in millicores. | Millicores | autoavgmaxmin |

| builtin:containers | Containers: CPU throttled time, ns/min Total amount of time a container has been throttled, in nanoseconds per minute. | Nanosecond | autoavgmaxmin |

| builtin:containers | Containers: CPU usage, mCores CPU usage per container in millicores | Millicores | autoavgmaxmin |

| builtin:containers | Containers: CPU usage, % of limit Percent CPU usage per container relative to CPU resource limit. Logical cores are used if CPU limit isn't set. | Percent (%) | autoavgmaxmin |

| builtin:containers | Containers: CPU system usage, mCores CPU system usage per container in millicores. | Millicores | autoavgmaxmin |

| builtin:containers | Containers: CPU system usage time, ns/min Used system time per container in nanoseconds per minute. | Nanosecond | autoavgmaxmin |

| builtin:containers | Containers: CPU usage time, ns/min Sum of used system and user time per container in nanoseconds per minute. | Nanosecond | autoavgmaxmin |

| builtin:containers | Containers: CPU user usage, mCores CPU user usage per container in millicores. | Millicores | autoavgmaxmin |

| builtin:containers | Containers: CPU user usage time, ns/min Used user time per container in nanoseconds per minute. | Nanosecond | autoavgmaxmin |

Memory

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:containers | Containers: Memory cache, bytes Page cache memory per container in bytes. | Byte | autoavgmaxmin |

| builtin:containers | Containers: Memory limit, bytes Memory limit per container in bytes. If no limit is set, this is an empty value. | Byte | autoavgmaxmin |

| builtin:containers | Containers: Memory limit, % of physical memory Percent memory limit per container relative to total physical memory. If no limit is set, this is an empty value. | Percent (%) | autoavg |

| builtin:containers | Containers: Memory - out of memory kills Number of out of memory kills for a container. | Count | autovalue |

| builtin:containers | Containers: Memory - total physical memory, bytes Total physical memory on the host in bytes. | Byte | autoavgmaxmin |

| builtin:containers | Containers: Memory usage, bytes Resident set size (Linux) or private working set size (Windows) per container in bytes. | Byte | autoavgmaxmin |

| builtin:containers | Containers: Memory usage, % of limit Resident set size (Linux) or private working set size (Windows) per container in percent relative to container memory limit. If no limit is set, this equals total physical memory. | Percent (%) | autoavgmaxmin |

Dashboards

Other dashboards metrics

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:dashboards | Dashboard view count | Count | autovalue |

Infrastructure

Availability

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:host | Host availability Host availability state metric reported in 1 minute intervals | Count | autovalue |

CPU

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:host | z/OS General CPU usage The percent of the general-purpose central processor (GCP) used | Percent (%) | autoavgmaxmin |

| builtin:host | z/OS Rolling 4 hour MSU average The 4h average of consumed million service units on this LPAR | MSU | autoavgmaxmin |

| builtin:host | z/OS MSU capacity The over all capacity of million service units on this LPAR | MSU | autoavgmaxmin |

| builtin:host | z/OS zIIP eligible time The zIIP eligible time spent on the general-purpose central processor (GCP) after process start per minute | Second | autoavgmaxmin |

| builtin:host | AIX Entitlement configured Capacity Entitlement is the number of virtual processors assigned to the AIX partition. It's measured in fractions of processor equal to 0.1 or 0.01. For more information about entitlement, see Assigning the appropriate processor entitled capacity in official IBM documentation. | Ratio | autoavgmaxmin |

| builtin:host | AIX Entitlement used Percentage of entitlement used. Capacity Entitlement is the number of virtual cores assigned to the AIX partition. See for more information about entitlement, see Assigning the appropriate processor entitled capacity in official IBM documentation. | Percent (%) | autoavgmaxmin |

| builtin:host | CPU idle Average CPU time, when the CPU didn't have anything to do | Percent (%) | autoavgmaxmin |

| builtin:host | CPU I/O wait Percentage of time when CPU was idle during which the system had an outstanding I/O request. It is not available on Windows. | Percent (%) | autoavgmaxmin |

| builtin:host | System load The average number of processes that are being executed by CPU or waiting to be executed by CPU over the last minute | Ratio | autoavgmaxmin |

| builtin:host | System load15m The average number of processes that are being executed by CPU or waiting to be executed by CPU over the last 15 minutes | Ratio | autoavgmaxmin |

| builtin:host | System load5m The average number of processes that are being executed by CPU or waiting to be executed by CPU over the last 5 minutes | Ratio | autoavgmaxmin |

| builtin:host | CPU other Average CPU time spent on other tasks: servicing interrupt requests (IRQ), running virtual machines under the control of the host's kernel (meaning the host is a hypervisor for VMs). It's available only for Linux hosts | Percent (%) | autoavgmaxmin |

| builtin:host | AIX Physical consumed Total CPUs consumed by the AIX partition | Ratio | autoavgmaxmin |

| builtin:host | CPU steal Average CPU time, when a virtual machine waits to get CPU cycles from the hypervisor. In a virtual environment, CPU cycles are shared across virtual machines on the hypervisor server. If your virtualized host displays a high CPU steal, it means CPU cycles are being taken away from your virtual machine to serve other purposes. It may indicate an overloaded hypervisor. It's available only for Linux hosts | Percent (%) | autoavgmaxmin |

| builtin:host | CPU system Average CPU time when CPU was running in kernel mode | Percent (%) | autoavgmaxmin |

| builtin:host | CPU usage % Percentage of CPU time when CPU was utilized. A value close to 100% means most host processing resources are in use, and host CPUs can't handle additional work | Percent (%) | autoavgmaxmin |

| builtin:host | CPU user Average CPU time when CPU was running in user mode | Percent (%) | autoavgmaxmin |

DNS

| Metric key | Name and description | Unit | Aggregations |

|---|---|---|---|

| builtin:host | Number of DNS errors by type Number of DNS errors by type | Count | autoavgcountmaxminsum |

| builtin:host | Number of orphaned DNS responses Number of orphaned DNS responses on the host | Count | autoavgcountmaxminsum |

| builtin:host | Number of DNS queries Number of DNS queries on the host | Count | autoavgcountmaxminsum |