Configure monitoring capabilities of Dynatrace Android Gradle plugin


With the following configuration options, you can customize OneAgent monitoring capabilities and fine-tune the auto-instrumentation process for these features.

User action monitoring

OneAgent creates user actions based on the UI components that trigger these actions and automatically combines user action data with other monitoring data, such as information on web requests and crashes. OneAgent extends the lifetime of user actions to properly aggregate them with other events that are executed in a background thread or immediately after a user action.

Configure user action monitoring

You can configure user action monitoring using the properties described in the following sections.

All properties related to user action monitoring are part of the UserAction DSL, so configure them via the userActions block.

    dynatrace {
        configurations {
            sampleConfig {
                userActions {
                    // your user action monitoring configuration
                }
            }
        }
    }

timeout and maxDuration properties

With the timeout property, you can configure the time during which OneAgent can add other events to the newly created user action. When another user interaction is detected, OneAgent stops adding events to the user action of the previous interaction, regardless of the configured timeout value. Instead, OneAgent adds events only to the user action from the current user interaction.

When the timeout period expires, OneAgent checks for open events and waits until these events are completed. With the maxDuration property, you can configure the maximum duration of these user actions. If an open event, for example, a web request, is still not finished after this period, OneAgent removes the event from the user action and closes the user action with an appropriate end time value.

Specify the value for both properties in milliseconds. The value of the maxDuration property must be equal to or greater than the value of the timeout property.

| Property | Default value | Possible values |
|---|---|---|
| timeout | 500 | 100 – 5000 |
| maxDuration | 60000 | 100 – 540000 |

You can configure only one value for each of the timeout and maxDuration properties. These values apply to all user actions on all devices, so choose values that fit all of them.
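The documented constraints can be expressed as a small validation check. The sketch below is a hypothetical helper. The class and method names are illustrative and are not part of the Dynatrace plugin or the OneAgent API. It encodes the ranges from the table and the rule that maxDuration must be equal to or greater than timeout. You might use something like it in your own build tooling before you set the values in the userActions block.

```java
/**
 * Hypothetical helper (not part of the Dynatrace plugin or OneAgent API) that
 * checks a timeout/maxDuration pair against the documented constraints.
 * All values are in milliseconds.
 */
final class UserActionTimingCheck {
    static final long TIMEOUT_MIN = 100;
    static final long TIMEOUT_MAX = 5_000;
    static final long MAX_DURATION_MIN = 100;
    static final long MAX_DURATION_MAX = 540_000;

    private UserActionTimingCheck() {}

    /** Returns true if both values are in range and maxDuration >= timeout. */
    static boolean isValid(long timeoutMs, long maxDurationMs) {
        boolean timeoutInRange = timeoutMs >= TIMEOUT_MIN && timeoutMs <= TIMEOUT_MAX;
        boolean maxDurationInRange =
                maxDurationMs >= MAX_DURATION_MIN && maxDurationMs <= MAX_DURATION_MAX;
        return timeoutInRange && maxDurationInRange && maxDurationMs >= timeoutMs;
    }
}
```

The defaults, isValid(500, 60_000), pass the check. A pair such as isValid(2_000, 1_000) fails because maxDuration is shorter than timeout.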

emptyActions property

OneAgent also reports user actions that don't contain child events. To discard such user actions, use the emptyActions property.

Disable user action monitoring

You can completely deactivate user action monitoring with the enabled property. In this case, all other properties are ignored, so only specify the enabled property to avoid confusion.

    dynatrace {
        configurations {
            sampleConfig {
                userActions.enabled false
            }
        }
    }

User action monitoring sensors

The plugin automatically instruments the following classes and methods:

| Library/framework | Instrumented classes/methods | Sensor |
|---|---|---|
| Android | android.view.View$OnClickListener | click |
| Android | android.widget.AdapterView$OnItemClickListener | itemClick |
| Android | android.widget.AdapterView$OnItemSelectedListener | itemSelect |
| Android | android.app.Activity.onOptionsItemSelected | optionSelect |
| Android | android.view.MenuItem$OnMenuItemClickListener | menuClick |
| AndroidX | androidx.viewpager.widget.ViewPager$OnPageChangeListener | pageChange |
| AndroidX | androidx.swiperefreshlayout.widget.SwipeRefreshLayout$OnRefreshListener | refresh |
| Android Support | android.support.v4.view.ViewPager$OnPageChangeListener | pageChange |
| Android Support | android.support.v4.widget.SwipeRefreshLayout$OnRefreshListener | refresh |
| Jetpack Compose | Modifier.combinedClickable | composeClickable |
| Jetpack Compose | Modifier.toggleable | composeClickable |
| Jetpack Compose | Modifier.swipeable | composeSwipeable |
| Jetpack Compose | Modifier.pullRefresh | composePullRefresh |
| Jetpack Compose | Slider, RangeSlider | composeSlider |
| Jetpack Compose | HorizontalPager, VerticalPager | composePager |
| Jetpack Compose | Responsible for providing semantics information for user actions. This sensor doesn't detect any user input. | composeSemantics |

You can deactivate specific sensors via the UserAction Sensor DSL properties. Configure them inside the sensors block.

    dynatrace {
        configurations {
            sampleConfig {
                userActions {
                    sensors {
                        // fine-tune the sensors if necessary
                        pageChange false
                        refresh false
                    }
                }
            }
        }
    }

User action naming

To construct user action names, OneAgent captures the control title from different attributes depending on the listener or method used. See the table below for details.

| Listener, method, or component | Evaluated attribute/property |
|---|---|
| android.view.View$OnClickListener, android.widget.AdapterView$OnItemClickListener, android.widget.AdapterView$OnItemSelectedListener | Attributes are evaluated in the following order: 1) android:contentDescription attribute, 2) android:text attribute for TextView-based components, 3) class name |
| android.app.Activity.onOptionsItemSelected, android.view.MenuItem$OnMenuItemClickListener | getTitle for menu items |
| androidx.viewpager.widget.ViewPager$OnPageChangeListener, androidx.swiperefreshlayout.widget.SwipeRefreshLayout$OnRefreshListener, android.support.v4.view.ViewPager$OnPageChangeListener, android.support.v4.widget.SwipeRefreshLayout$OnRefreshListener | The action type is used as the action name because no UI component is available |

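For Jetpack Compose UI components, semantics properties are evaluated by priority, as described in User action naming under User action monitoring for Jetpack Compose.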
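The fallback order for View-based listeners can be modeled as a simple chain. This is a hypothetical sketch, not OneAgent code, and the class name ControlTitleResolver is illustrative. It assumes that a null or empty attribute counts as unavailable. The source doesn't spell out that detail for views.

```java
/**
 * Hypothetical model (not OneAgent code) of the documented fallback order for
 * View-based listeners: android:contentDescription, then android:text for
 * TextView-based components, then the class name.
 */
final class ControlTitleResolver {
    private ControlTitleResolver() {}

    /**
     * @param contentDescription value of android:contentDescription, may be null
     * @param text value of android:text, or null if the view isn't TextView-based
     * @param className class name of the view, for example "Button"
     */
    static String resolve(String contentDescription, String text, String className) {
        if (hasValue(contentDescription)) {
            return contentDescription;
        }
        if (hasValue(text)) {
            return text;
        }
        return className;
    }

    // Assumption: null and empty strings count as "not available".
    private static boolean hasValue(String s) {
        return s != null && !s.isEmpty();
    }
}
```

A button with both a content description and a label therefore yields the content description. A view without either falls back to its class name.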

Mask user actions

Dynatrace Android Gradle plugin version 8.249+

By default, user action names are derived from UI control titles, for example, button or menu item titles. In rare circumstances, email addresses, usernames, or other personal information might be unintentionally included in user action names. This happens when this information is included in parameters used for control titles, resulting in user action names such as Touch on Account 123456.

If such personal information appears in your application's user action names, enable user action masking. OneAgent then replaces the control title in all Touch on <control title> action names with the class name of the control that the user touched, for example:

- Touch on Account 123456 → Touch on Button
- Touch on Transfer all amount → Touch on Switch
- Touch on Account settings → Touch on ActionMenuItem
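The mapping in the examples above can be illustrated with a few lines of Java. This is a hypothetical illustration of the described behavior, not how OneAgent implements it. The class name ActionNameMasking is illustrative, and the sketch assumes that names without the Touch on prefix are left unchanged.

```java
/**
 * Hypothetical illustration (not OneAgent code) of the masking shown above:
 * the control title in a "Touch on <control title>" name is replaced with the
 * class name of the touched control.
 */
final class ActionNameMasking {
    private static final String PREFIX = "Touch on ";

    private ActionNameMasking() {}

    static String mask(String actionName, String controlClassName) {
        if (actionName.startsWith(PREFIX)) {
            return PREFIX + controlClassName;
        }
        // Names that don't follow the "Touch on" pattern are left unchanged in this sketch.
        return actionName;
    }
}
```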

You can enable user action masking with the namePrivacy property of the UserAction DSL.

    dynatrace {
        configurations {
            sampleConfig {
                userActions {
                    namePrivacy true
                }
            }
        }
    }

If you enable the namePrivacy property, it will also mask user actions for Jetpack Compose UI components, and some component metadata won't be captured.

If you want to change names only for certain user actions, use one of the following settings:

- Modify autogenerated actions to change user action names

- Set naming rules (mobile app settings > Naming rules) to configure user action naming rules or extraction rules

Modify user actions

OneAgent for Android creates user actions based on interactions of your application's users. These actions are different from custom actions and are sometimes called autogenerated actions. We also refer to them as user actions.

You can modify or even cancel user actions.

If you want to avoid capturing personal information for all user actions at once, see Mask user actions.

For Jetpack Compose UI components, there is an additional option available for setting a custom user action name.

Modify a specific user action

With Dynatrace.modifyUserAction, you can modify the current user action. You can change the user action name and report events, values, and errors. You can also cancel a user action.

The Dynatrace.modifyUserAction method accepts an implementation of UserActionModifier as a parameter, which provides you with the current mutable ModifiableUserAction object.

Allowed operations on this user action object are as follows:

- getActionName
- setActionName
- reportEvent
- reportValue
- reportError
- cancel (OneAgent for Android version 8.241+)

You can modify a user action only while it is still open. If the user action times out before it is modified, the modification has no effect. We recommend that you invoke Dynatrace.modifyUserAction inside the instrumented listener method and don't call this method from a different thread.

In the following example, we're using a calculator app to show you how to change the name of the user action that is created for the button click and how to report a value to the action.

    import android.view.View;
    import com.dynatrace.android.agent.Dynatrace;

    button.setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            int result = calc();
            // we use Java 8 language features here. You can also use anonymous classes instead of lambdas
            Dynatrace.modifyUserAction(userAction -> {
                userAction.setActionName("Click on Calculate");
                userAction.reportValue("Calculated result", result);
            });
            showResult(result);
        }
    });

For a mobile custom action or a mobile autogenerated user action, the maximum name length is 250 characters.
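If you build action names from dynamic values before you pass them to setActionName, you can keep them within the 250-character limit on the app side. The following is a minimal sketch with two assumptions. First, you want to truncate long names rather than reject them. Second, the helper name ActionNames is hypothetical. The source doesn't say how OneAgent itself handles longer names.

```java
/** Hypothetical app-side helper that keeps action names within the documented limit. */
final class ActionNames {
    static final int MAX_NAME_LENGTH = 250;

    private ActionNames() {}

    static String fitToLimit(String name) {
        if (name.length() <= MAX_NAME_LENGTH) {
            return name;
        }
        int end = MAX_NAME_LENGTH;
        // Don't cut a surrogate pair (for example, an emoji) in half.
        if (Character.isHighSurrogate(name.charAt(end - 1))) {
            end--;
        }
        return name.substring(0, end);
    }
}
```

Inside the modifier, you would then call userAction.setActionName(ActionNames.fitToLimit(...)) with the name you built.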

Modify any user action

OneAgent for Android version 8.241+

You can modify user actions via Dynatrace.modifyUserAction. However, this works only for a specific user action, and you need to know whether that user action is still open.

To overcome these limitations, we introduced a feature that allows you to receive a callback for every newly created user action. With this approach, you are notified about every new autogenerated user action, so you get a chance to update the user action name as well as report events, values, and errors. You can also cancel a user action.

You can register a callback that is invoked for each user action. UserActionModifier is set once at OneAgent startup via the DynatraceConfigurationBuilder#withAutoUserActionModifier method. After that, it is invoked each time OneAgent creates a user action. It is not invoked for custom actions.

You can register the callback only at OneAgent startup, so you need to start OneAgent manually.

Allowed operations are as follows:

- getActionName
- setActionName
- reportEvent
- reportValue
- reportError
- cancel

    import com.dynatrace.android.agent.Dynatrace;
    import com.dynatrace.android.agent.conf.DynatraceConfigurationBuilder;

    Dynatrace.startup(this, new DynatraceConfigurationBuilder("<YourApplicationID>", "<ProvidedBeaconUrl>")
            .withAutoUserActionModifier(modifiableAction -> {
                if (modifiableAction.getActionName().contains("account ID")) {
                    // remove personal information from the user action name
                    modifiableAction.setActionName("Touch on Account");
                }
            })
            .buildConfiguration());
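The callback above checks for a fixed substring. To scrub a pattern from every autogenerated name instead, you can move that logic into a plain Java helper and call it from withAutoUserActionModifier. The following is a minimal sketch that assumes runs of four or more digits in a name are account or customer numbers. The class name ActionNameScrubber, the regular expression, and the threshold are all hypothetical, so adapt them to your app's data.

```java
import java.util.regex.Pattern;

/**
 * Hypothetical helper for use inside a withAutoUserActionModifier callback.
 * Assumption: runs of 4+ digits in a user action name are personal identifiers.
 */
final class ActionNameScrubber {
    private static final Pattern LONG_DIGIT_RUN = Pattern.compile("\\d{4,}");

    private ActionNameScrubber() {}

    /** Replaces every run of 4 or more digits with "***". */
    static String scrub(String actionName) {
        return LONG_DIGIT_RUN.matcher(actionName).replaceAll("***");
    }
}
```

Inside the callback, you could call modifiableAction.setActionName(ActionNameScrubber.scrub(modifiableAction.getActionName())). Keeping the rule in a separate class lets you unit-test it without starting OneAgent.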

For a mobile custom action or a mobile autogenerated user action, the maximum name length is 250 characters.

Android data binding library

The Dynatrace Android Gradle plugin can instrument event logic and listeners that are defined via the data binding feature. If your app contains code similar to the official listener binding example, the plugin can detect the correct bytecode and instrument it.

    <?xml version="1.0" encoding="utf-8"?>
    <layout xmlns:android="http://schemas.android.com/apk/res/android">
        <data>
            <variable name="task" type="com.android.example.Task" />
            <variable name="presenter" type="com.android.example.Presenter" />
        </data>
        <LinearLayout
            android:layout_width="match_parent"
            android:layout_height="match_parent">
            <Button
                android:layout_width="wrap_content"
                android:layout_height="wrap_content"
                android:onClick="@{() -> presenter.onSaveClick(task)}" />
        </LinearLayout>
    </layout>

Define an event handler via XML attribute

The following example from the Android documentation shows how you can define an event handler via XML attributes.

    <?xml version="1.0" encoding="utf-8"?>
    <Button xmlns:android="http://schemas.android.com/apk/res/android"
        android:id="@+id/button_send"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:text="@string/button_send"
        android:onClick="sendMessage" />

    /** Called when the user touches the button */
    public void sendMessage(View view) {
        // Do something in response to button click
    }

The Dynatrace Android Gradle plugin cannot determine the relationship between the button in the layout XML file and the sendMessage method in the activity. However, when your app uses the Appcompat library and your activities are derived from androidx.appcompat.app.AppCompatActivity, the plugin auto-instruments the delegation logic of the Appcompat library. If you don't use the Appcompat library, you must manually instrument these event handler methods because the plugin is unable to determine the connection between the bytecode and the layout XML file.

User action monitoring for Jetpack Compose

Dynatrace Android Gradle plugin version 8.263+

Dynatrace supports the auto-instrumentation of Jetpack Compose UI components. OneAgent creates user actions based on the UI components that trigger these actions and automatically combines user action data with other monitoring data, such as information on web requests and crashes.

Jetpack Compose auto-instrumentation is enabled by default starting with Dynatrace Android Gradle plugin version 8.271.

See Technology support | Mobile app Real User Monitoring for more details.

Supported UI components

We support auto-instrumentation of standard and custom components with default user interactions from the Jetpack Compose UI framework. The table below contains these components and user interactions as well as several UI components that are based on these instrumented user interactions.

Components and user interactions

Example components

Dynatrace Android Gradle plugin version 8.267+

The second version of Modifier.pullRefresh is not supported.

Supported versions of Jetpack Compose: 1.3–1.5.

Disable user action monitoring

You can disable user action monitoring for Jetpack Compose with the composeEnabled property of the UserAction DSL. Note that all other Jetpack Compose sensor properties are ignored when Jetpack Compose auto-instrumentation is disabled, so only specify the composeEnabled property to avoid confusion.

    dynatrace {
        configurations {
            sampleConfig {
                userActions {
                    composeEnabled false
                }
            }
        }
    }

User action monitoring sensors

The following sensors are supported for the Jetpack Compose auto-instrumentation. For more details, check the overview table with all sensors.

- composeClickable
- composeSwipeable
- composeSemantics

You can deactivate specific sensors via the UserAction Sensor DSL.

We recommend that you do not disable the composeSemantics sensor, as it's responsible for generating proper user action names.

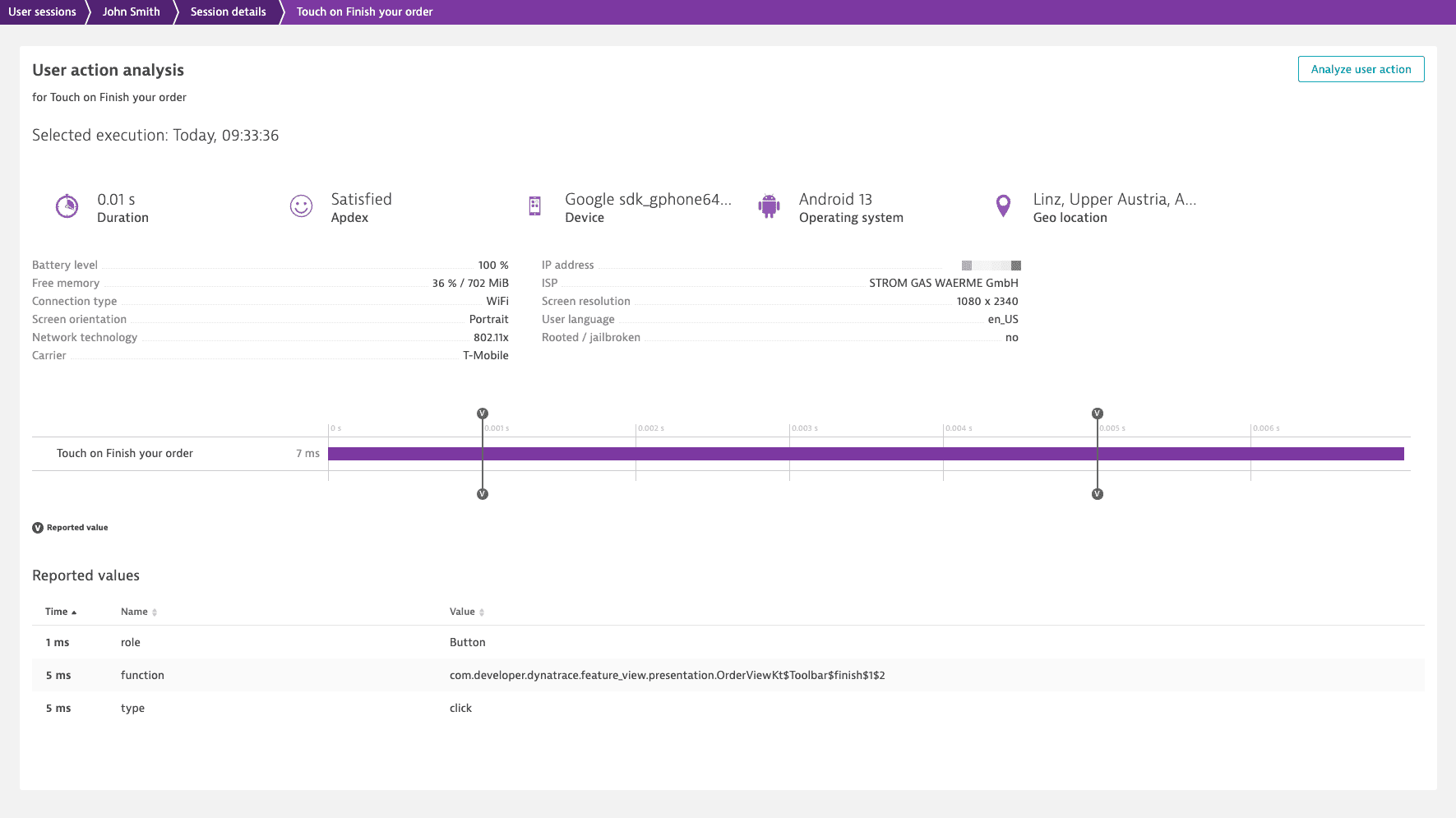

If you disable this sensor, OneAgent uses the class name of the instrumented component in the user action name. For example, Touch on Finish your order might change to something like Touch on Button with function OrderViewKt$Toolbar$finish$1$2.

User action naming

To construct user action names for Jetpack Compose UI components, OneAgent captures semantics information and evaluates the information from the merged semantics tree of the instrumented component.

Four properties are evaluated to generate proper user action names. When several properties are present, the property with the highest priority is used for a user action name.

When several properties of the same type are available, the value of the first property is used for the user action name. Empty property values are ignored.

Simplified example with two properties of the same type

In the code snippet below, the button contains two Text properties; the values of both properties are available in the merged semantics tree. OneAgent will pick up the first one and generate the Touch on Title user action name.

    Button(onClick = { ... }) {
        Text("Title")
        Text("Body")
    }

More complex example with two properties of the same type

In the code snippet below, the component contains two contentDescription properties; the values of both properties are available in the merged semantics tree. OneAgent will pick up the first one and generate the Touch on Finish your order user action name (not Touch on Finish).

    var checked by rememberSaveable { mutableStateOf(false) }
    val likeIcon = if (checked) Icons.Filled.ThumbUp else Icons.Outlined.ThumbUp
    IconToggleButton(
        checked = checked,
        onCheckedChange = { checked = it },
        modifier = Modifier
            .clip(CircleShape)
            .background(if (checked) MaterialTheme.colors.primary else Color.Transparent)
            .semantics { contentDescription = "Finish your order" }
    ) {
        Icon(
            likeIcon,
            "Like",
            modifier = Modifier.semantics { contentDescription = "Finish" }
        )
    }
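The selection rule described above works in two steps: take the highest-priority property type first, then take the first non-empty value of that type. This can be modeled in a few lines. This is a hypothetical sketch, not OneAgent code. The four property types aren't named in this section, so the input is a generic list of value lists. The outer list is ordered from highest to lowest priority, and each inner list follows the order of the merged semantics tree. The sketch also assumes that a type whose values are all empty is skipped in favor of the next type.

```java
import java.util.List;

/** Hypothetical model (not OneAgent code) of Compose user action name selection. */
final class ComposeActionNameModel {
    private ComposeActionNameModel() {}

    /**
     * @param valuesByPriority one list per property type, highest priority first;
     *                         each inner list holds that property's values in tree order
     * @return the chosen name, or null if every value is empty
     */
    static String pickName(List<List<String>> valuesByPriority) {
        for (List<String> values : valuesByPriority) {
            for (String value : values) {
                // Empty property values are ignored.
                if (value != null && !value.isEmpty()) {
                    return value;
                }
            }
        }
        return null;
    }
}
```

For the two examples above, the input is a single property type with the values Title and Body, or Finish your order and Finish. In each case, the model returns the first value.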

Set custom user action name

If you want to set a custom user action name for a Jetpack Compose UI component, use the SemanticsPropertyReceiver.dtActionName property.

    // This import is required to use our custom semantics property
    import com.dynatrace.android.api.compose.dtActionName

    Box(
        modifier = Modifier
            .clickable { ... }
            .semantics { dtActionName = "Finish your order" }
    ) {
        ...
    }

For the code snippet above, OneAgent will generate the Touch on Finish your order user action name.

The SemanticsPropertyReceiver.dtActionName property is part of the merged semantics tree, but it's ignored by accessibility services.

For a mobile custom action or a mobile autogenerated user action, the maximum name length is 250 characters.

If you want to mask personal information that appears in your application's user action names, enable user action masking.

Captured component metadata

When monitoring your mobile application, OneAgent for Android also captures additional metadata for the instrumented Jetpack Compose components. This metadata is stored as key-value pairs and is available in the Dynatrace web UI. It provides additional context for your instrumented components, for example, their location in code, initial and target states, user action types, and more.

Captured parameters and values by component

The following key-value pairs are reported depending on how the user interacted with the Jetpack Compose UI component.

Modifier.clickable

| Parameter | Description | Reported value |
|---|---|---|
| function | Function name for the onClick parameter | |
| role | Type of user interface element | null when role isn't provided |
| type | User action type | click |

Modifier.combinedClickable

| Parameter | Description | Reported value |
|---|---|---|
| function | Function name for the onClick, onLongClick, or onDoubleClick parameter | |
| role | Type of user interface element | null when role isn't provided |
| type | User action type | click, double click, long click |

Modifier.toggleable

| Parameter | Description | Reported value |
|---|---|---|
| function | Function name for the onValueChange parameter | |
| role | Type of user interface element | null when role isn't provided |
| fromState | Initial state of the UI component. For example, if the checkbox was selected and the user cleared it, On is reported as fromState. | |
| type | User action type | toggle |

Modifier.swipeable

| Parameter | Description | Reported value |
|---|---|---|
| state class | Class that is a state holder for the UI component, for example, SwipeableState | |
| fromState¹ | Initial state of the UI component. For example, if the user swiped from A to B, A is reported as fromState. | |
| toState¹ | Target state of the UI component. For example, if the user swiped from A to B, B is reported as toState. | |
| type | User action type | swipe |

¹ Not reported if user action masking is enabled.

Modifier.pullRefresh

| Parameter | Description | Reported value |
|---|---|---|
| function | Class name for the onRefresh parameter (method rememberPullRefreshState) | |
| type | User action type | pull refresh |

Slider and RangeSlider

| Parameter | Description | Reported value |
|---|---|---|
| function | Function name for the onValueChange parameter | |
| toState for Slider | Selected slider value, for example, 150 | |
| toState for RangeSlider | Selected slider range, for example, 25..150 | |
| type | User action type | slide |

HorizontalPager and VerticalPager

| Parameter | Description | Reported value |
|---|---|---|
| orientation | Pager type | Horizontal or Vertical |
| fromState | Initial page index. For example, if the user swiped through a photo gallery from photo 1 to photo 2, 1 is reported as fromState. | |
| toState | Target page index. For example, if the user swiped through a photo gallery from photo 1 to photo 2, 2 is reported as toState. | |
| type | User action type | pager |

View component metadata in Dynatrace

The metadata captured for Jetpack Compose UI components is available on the waterfall analysis pages of your app's user actions.

To view captured metadata for a Jetpack Compose component:

- In Dynatrace, go to Session Segmentation.
- Find and select a session that contains the required user action.
- Under Events and actions, expand the user action, and then select Perform waterfall analysis.
- Scroll down to the Reported values section to see the metadata captured for the Jetpack Compose UI component.

Web request monitoring

The Dynatrace Android Gradle plugin can automatically instrument and tag your web requests. To track web requests, OneAgent adds the x-dynatrace HTTP header with a unique value to the web request. This is required to correlate the server-side monitoring data to the corresponding mobile web request.

For HTTP(S) requests, you cannot combine automatic and manual web request instrumentation. However, you can use automatic instrumentation for HTTP(S) requests and manual instrumentation for non-HTTP(S) requests such as WebSocket or gRPC requests.

Configure web request monitoring

All properties related to web request monitoring are part of the WebRequest DSL, so configure them via the webRequests block.

    dynatrace {
        configurations {
            sampleConfig {
                webRequests {
                    // your web request monitoring configuration
                }
            }
        }
    }

If a web request is triggered shortly after a monitored user interaction, OneAgent adds the web request as a child event to the monitored user action. OneAgent automatically truncates the query from the captured URL and only reports the domain name and path of your web requests.
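The URL reduction described above can be sketched with java.net.URI. This is a hypothetical illustration, not OneAgent code, and the class name ReportedUrl is illustrative. The sketch keeps the scheme and port alongside the domain name and path, which is an assumption. It drops user info, the query, and the fragment.

```java
import java.net.URI;
import java.net.URISyntaxException;

/**
 * Hypothetical sketch (not OneAgent code) of the URL reduction described above:
 * keep scheme, host, port, and path; drop user info, query, and fragment.
 */
final class ReportedUrl {
    private ReportedUrl() {}

    static String withoutQuery(String url) {
        try {
            URI uri = new URI(url);
            // The raw path keeps percent-encoded octets as they were in the input.
            return new URI(uri.getScheme(), null, uri.getHost(), uri.getPort(),
                    uri.getRawPath(), null, null).toString();
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Not a valid URI: " + url, e);
        }
    }
}
```

For example, a request to https://api.example.com/v1/orders?id=42#top is reduced to https://api.example.com/v1/orders in this sketch.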

Disable web request monitoring

You can completely deactivate web request monitoring with the enabled property. In this case, all other properties are ignored, so only specify the enabled property to avoid confusion.

    dynatrace {
        configurations {
            sampleConfig {
                webRequests.enabled false
            }
        }
    }

Web request monitoring sensors

The following HTTP frameworks are supported:

- HttpURLConnection

- OkHttp: Only versions 3, 4, and 5

- Apache HTTP Client: Only the Android-internal HTTP Client version¹

¹ Android has deprecated the Apache HTTP client library (see Android 6.0 changes and Android 9.0 changes), so use a different HTTP framework. The new Apache HTTP Client version 5 is not supported. Old Apache HTTP Client versions are supported because they provide the same interface.

If your web request library is based on one of these supported frameworks, the internal classes of the library are automatically instrumented. For example, Retrofit version 2 is based on OkHttp, so all Retrofit web requests are automatically instrumented.

You can deactivate specific sensors via the WebRequest Sensor DSL properties. Configure them inside the sensors block.

    dynatrace {
        configurations {
            sampleConfig {
                webRequests {
                    sensors {
                        // fine-tune the sensors if necessary
                    }
                }
            }
        }
    }

Lifecycle monitoring

To track lifecycle events, we use the official Android ActivityLifecycleCallbacks interface. For activities, Dynatrace reports the time of each entered lifecycle state until the activity is visible; if available, the timestamps of lifecycle callbacks are displayed in the user action waterfall analysis and are marked as a Lifecycle event.

Reported lifecycle events

With lifecycle monitoring, OneAgent collects data on the following lifecycle events.

- Application start event (AppStart): Measures the time required to start an application and display the first activity. The application start event is not captured when the automatic OneAgent startup is disabled or when the application starts up in the background and does not promptly open an activity.

- Activity display: Measures the time required to display an activity.

- Activity redisplay: Measures the time required to redisplay a previously created activity. Two options are possible:

- Option 1: An activity is in the Stopped mode and is not visible on the screen, and then it's Started and Resumed again.

- Option 2: An activity is in the Paused mode and is partially obscured rather than fully visible on the screen, and then it's Resumed again.

The timespan used for measuring the lifecycle event duration depends on the lifecycle event type and the level of Android API. When Android API level 29+ is used, we can measure the duration of lifecycle events more accurately thanks to pre- and post-lifecycle callbacks.

| Lifecycle event | Android API 29+ | Android API 28 and earlier | Reported lifecycle callbacks |
|---|---|---|---|
| Application start event | Application.onCreate() – onActivityPostResumed of the first activity | Application.onCreate() – onActivityResumed of the first activity | No callbacks reported |
| Activity display | onActivityPreCreated – onActivityPostResumed | onActivityCreated – onActivityResumed | onCreate, onStart, onResume |
| Activity redisplay, option 1 | onActivityPreStarted – onActivityPostResumed | onActivityStarted – onActivityResumed | onStart, onResume |
| Activity redisplay, option 2 | onActivityPreResumed – onActivityPostResumed | Not possible to measure the duration | onResume |
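The table shows that the API level determines which callbacks bound each measured timespan. The following sketch is a hypothetical encoding of that table in plain Java, not OneAgent code. The class name, the enum, and the method are illustrative. The method returns null where the table says the duration can't be measured.

```java
/**
 * Hypothetical encoding (not OneAgent code) of the lifecycle timespan table:
 * which callbacks bound each measured timespan, depending on the Android API level.
 */
final class LifecycleTimespans {
    enum Event { APP_START, ACTIVITY_DISPLAY, ACTIVITY_REDISPLAY_STOPPED, ACTIVITY_REDISPLAY_PAUSED }

    private LifecycleTimespans() {}

    /** Returns {startCallback, endCallback}, or null if the duration can't be measured. */
    static String[] bounds(Event event, int apiLevel) {
        boolean prePost = apiLevel >= 29; // pre- and post-lifecycle callbacks exist from API 29
        switch (event) {
            case APP_START:
                return new String[] {"Application.onCreate",
                        prePost ? "onActivityPostResumed" : "onActivityResumed"};
            case ACTIVITY_DISPLAY:
                return prePost
                        ? new String[] {"onActivityPreCreated", "onActivityPostResumed"}
                        : new String[] {"onActivityCreated", "onActivityResumed"};
            case ACTIVITY_REDISPLAY_STOPPED:
                return prePost
                        ? new String[] {"onActivityPreStarted", "onActivityPostResumed"}
                        : new String[] {"onActivityStarted", "onActivityResumed"};
            case ACTIVITY_REDISPLAY_PAUSED:
                return prePost
                        ? new String[] {"onActivityPreResumed", "onActivityPostResumed"}
                        : null;
            default:
                throw new IllegalArgumentException("Unknown event: " + event);
        }
    }
}
```

On API 28 and earlier, only a Paused-to-Resumed redisplay has no measurable duration. On those levels, no pre-resume callback exists to mark the start.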

Configure lifecycle monitoring

Lifecycle events are either part of existing user actions, or they create a new user action and attach the display or redisplay lifecycle action to it.

All properties related to lifecycle monitoring are part of the Lifecycle DSL, so configure them via the lifecycle block.

    dynatrace {
        configurations {
            sampleConfig {
                lifecycle {
                    // your lifecycle monitoring configuration
                }
            }
        }
    }

Disable lifecycle monitoring

You can deactivate lifecycle monitoring with the enabled property. In this case, all other properties are ignored, so specify only the enabled property.

    dynatrace {
        configurations {
            sampleConfig {
                lifecycle.enabled false
            }
        }
    }

Lifecycle monitoring sensors

You can deactivate specific sensors via the Lifecycle Sensor DSL properties. Configure them inside the sensors block.

    dynatrace {
        configurations {
            sampleConfig {
                lifecycle {
                    sensors {
                        // fine-tune the sensors if necessary
                    }
                }
            }
        }
    }

Crash reporting

OneAgent captures all uncaught exceptions. The crash report includes the occurrence time and the full stack trace of the exception.

In general, the crash details are sent immediately after the crash, so the user doesn’t have to relaunch the application. However, in some cases, the application should be reopened within 10 minutes so that the crash report is sent. Note that Dynatrace doesn't send crash reports that are older than 10 minutes (as such reports can no longer be correlated on the Dynatrace Cluster).
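The 10-minute rule can be expressed as a simple age check with java.time. This is a hypothetical model of the described behavior, not OneAgent code. The class name CrashReportAge is illustrative. The sketch assumes that a report exactly 10 minutes old still counts as sendable, because only reports older than 10 minutes are dropped.

```java
import java.time.Duration;
import java.time.Instant;

/**
 * Hypothetical model (not OneAgent code) of the 10-minute rule: a crash report
 * is only sent while it can still be correlated on the Dynatrace Cluster.
 */
final class CrashReportAge {
    static final Duration MAX_AGE = Duration.ofMinutes(10);

    private CrashReportAge() {}

    static boolean isSendable(Instant crashTime, Instant now) {
        Duration age = Duration.between(crashTime, now);
        // Assumption: exactly 10 minutes old is still sendable.
        return !age.isNegative() && age.compareTo(MAX_AGE) <= 0;
    }
}
```

In this model, a crash that happened 9 minutes before the app is reopened is still reported. A crash from 11 minutes earlier is not.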

You can deactivate crash reporting with the crashReporting property.

    dynatrace {
        configurations {
            sampleConfig {
                crashReporting false
            }
        }
    }

Rage tap detection

Dynatrace Android Gradle plugin version 8.231+

When your mobile app doesn't respond quickly, a text label looks like a button, or a toggle is hidden under another toggle, users might repeatedly tap the screen or the affected UI control in frustration. OneAgent detects such behavior as a rage tap.

OneAgent can monitor only touch screen events that are handled by an Activity component. OneAgent cannot monitor Android UI components that have their own touch screen processing logic, for example, Dialog and DreamService.

You can deactivate rage tap detection using the detectRageTaps property of the BehavioralEvents DSL.

    dynatrace {
        configurations {
            sampleConfig {
                behavioralEvents {
                    detectRageTaps false
                }
            }
        }
    }

Location monitoring

When enabled, OneAgent appends the captured end user positions to the monitoring data. To protect the privacy of the end user, OneAgent captures GPS coordinates with a precision of two decimal places (~1 km accuracy).
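Reducing coordinates to two decimal places means steps of 0.01 degrees. For latitude, one step is roughly 1.1 km, which matches the stated ~1 km accuracy. The following sketch shows such a reduction with BigDecimal. It is a hypothetical illustration, not OneAgent code. The class name CoarseLocation is illustrative, and the sketch assumes half-up rounding. OneAgent might round or truncate differently.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

/**
 * Hypothetical illustration (not OneAgent code) of reducing a coordinate to two
 * decimal places. Assumption: half-up rounding.
 */
final class CoarseLocation {
    private CoarseLocation() {}

    static double toTwoDecimals(double degrees) {
        // BigDecimal.valueOf uses the shortest decimal representation of the double.
        return BigDecimal.valueOf(degrees).setScale(2, RoundingMode.HALF_UP).doubleValue();
    }
}
```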

You can activate the location monitoring feature with the locationMonitoring property.

    dynatrace {
        configurations {
            sampleConfig {
                locationMonitoring true
            }
        }
    }

OneAgent only captures location data that is already processed in your application. OneAgent doesn't request additional location data from the Android SDK. If your app doesn't process location data, this feature has no effect. When location monitoring is disabled or no location information is available, Dynatrace uses IP addresses to determine the location of the user.

The plugin supports the following location listener:

android.location.LocationListener
