Azure OpenAI

- Published Mar 25, 2024

- Dynatrace version 1.272+

- Environment ActiveGate version 1.195+

Dynatrace ingests Azure OpenAI metrics from the Azure Metrics API. You can view metrics for each service instance, split metrics by multiple dimensions, and create custom charts to pin to your dashboards.
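Outside the UI, the same metrics can be read through the Dynatrace Metrics API v2 (`GET /api/v2/metrics/query`), where the `:splitBy()` transformation in the metric selector performs the dimension split. The sketch below only builds the request URL and headers and sends nothing. The environment URL, metric key, and dimension key are hypothetical placeholders: look up the actual keys for the Azure OpenAI metrics in your own environment first. The access token is assumed to have the `metrics.read` scope.

```python
from urllib.parse import urlencode

# Hypothetical placeholders: substitute your own environment URL and the
# metric key and dimension key that your environment shows for Azure OpenAI.
ENV_URL = "https://abc12345.live.dynatrace.com"
METRIC_KEY = "your.azure.openai.generatedtokens.metric"  # hypothetical key
DIMENSION = "model_deployment_name"                      # hypothetical dimension key


def build_query_url(env_url: str, metric_key: str, dimension: str,
                    time_from: str = "now-2h", resolution: str = "5m") -> str:
    """Build a Metrics API v2 query URL that splits one metric by one dimension."""
    # splitBy() returns one series per dimension value;
    # :sum selects the sum aggregation for each time slot.
    selector = f'{metric_key}:splitBy("{dimension}"):sum'
    params = {"metricSelector": selector, "from": time_from, "resolution": resolution}
    # urlencode percent-escapes the quotes, colons, and parentheses in the selector.
    return f"{env_url.rstrip('/')}/api/v2/metrics/query?{urlencode(params)}"


def auth_headers(token: str) -> dict[str, str]:
    """Headers for an access token assumed to carry the metrics.read scope."""
    return {"Authorization": f"Api-Token {token}", "Accept": "application/json"}


print(build_query_url(ENV_URL, METRIC_KEY, DIMENSION))
```

Pass the URL and headers to any HTTP client. Keeping URL construction separate from the request makes it easy to check the selector before querying a live environment.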

Enable monitoring

To learn how to enable service monitoring, see Enable service monitoring.

View service metrics

You can view the service metrics in your Dynatrace environment either on the custom device overview page or on your Dashboards page.

View metrics on the custom device overview page

To access the custom device overview page:

- Go to Technologies & Processes Classic.

- Filter by service name and select the relevant custom device group.

- Selecting the group opens the custom device group overview page, which lists all instances (custom devices) belonging to the group.

- Select an instance to view its custom device overview page.

View metrics on your dashboard

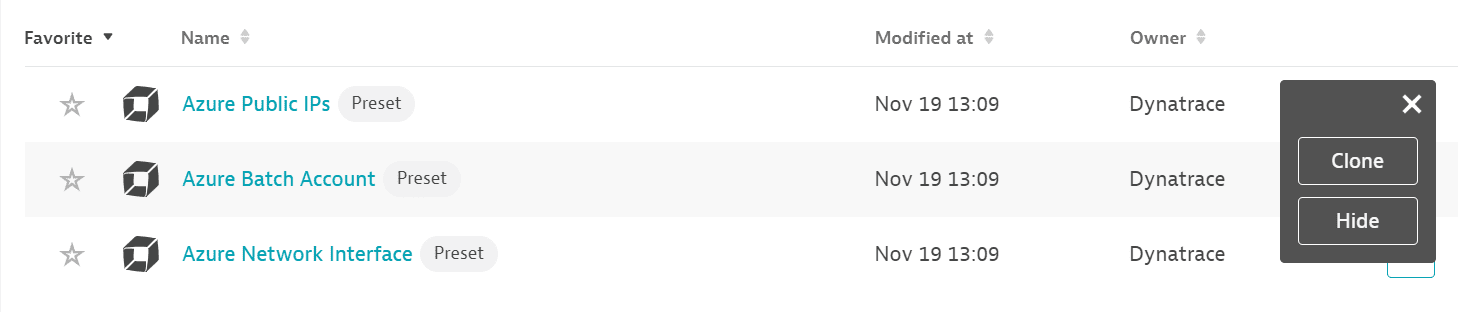

If the service has a preset dashboard, it appears on your Dashboards page and contains all recommended metrics for the service. To find a specific dashboard, filter by Preset and then by Name.

For services that were already being monitored, you might need to resave your credentials before the preset dashboard appears on the Dashboards page. To resave your credentials, go to Settings > Cloud and virtualization > Azure, select the desired Azure instance, then select Save.

You can't edit a preset dashboard directly, but you can clone it and edit the copy. To clone a dashboard, open the browse menu (…) and select Clone.

To remove a dashboard from the dashboards list, you can hide it. To hide a dashboard, open the browse menu (…) and select Hide.

Hiding a dashboard doesn't affect other users.

Available metrics

| Name | Description | Dimensions | Unit | Recommended |
|---|---|---|---|---|
| SuccessRate | Availability rate | API name, Operation name, Ratelimit key, Region | Percent | |
| BlockedCalls | Blocked calls | API name, Operation name, Ratelimit key, Region | Count | |
| ClientErrors | Client errors | API name, Operation name, Ratelimit key, Region | Count | |
| DataIn | Data in | API name, Operation name, Region | Byte | |
| DataOut | Data out | API name, Operation name, Region | Byte | |
| GeneratedTokens | Number of generated completion tokens | API name, Model deployment name, Model name, Region, Usage channel | Count | |
| Latency | Latency | API name, Operation name, Ratelimit key, Region | MilliSecond | |
| FineTunedTrainingHours | Processed fine-tuned training hours | API name, Model deployment name, Model name, Region, Usage channel | Count | |
| TokenTransaction | Processed inference tokens | API name, Model deployment name, Model name, Region, Usage channel | Count | |
| ProcessedPromptTokens | Processed prompt tokens | API name, Model deployment name, Model name, Region, Usage channel | Count | |
| Ratelimit | Ratelimit | Ratelimit key, Region | Count | |
| ServerErrors | Number of server errors | API name, Operation name, Ratelimit key, Region | Count | |
| SuccessfulCalls | Number of successful calls | API name, Operation name, Ratelimit key, Region | Count | |
| TotalCalls | Number of calls | API name, Operation name, Ratelimit key, Region | Count | |
| TotalErrors | Number of errors | API name, Operation name, Ratelimit key, Region | Count | |
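Several metrics in the table combine into useful ratios. The sketch below computes an error rate from TotalErrors and TotalCalls, and a token total from ProcessedPromptTokens and GeneratedTokens. The `Interval` type and the sample numbers are hypothetical; in practice the values would come from the metrics above, for example through a dashboard tile or a Metrics API query. Using the token sum as a cross-check for TokenTransaction assumes that processed inference tokens equal prompt tokens plus generated tokens, which is how Azure describes that metric; verify this against your own data.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    """One time slot of Azure OpenAI metric values (hypothetical sample structure)."""
    total_calls: int       # TotalCalls
    total_errors: int      # TotalErrors
    prompt_tokens: int     # ProcessedPromptTokens
    generated_tokens: int  # GeneratedTokens


def error_rate_percent(intervals: list[Interval]) -> float:
    """TotalErrors divided by TotalCalls over the whole window, as a percentage."""
    calls = sum(i.total_calls for i in intervals)
    errors = sum(i.total_errors for i in intervals)
    # Avoid division by zero when no calls were recorded.
    return 0.0 if calls == 0 else 100.0 * errors / calls


def inference_tokens(intervals: list[Interval]) -> int:
    """Prompt plus generated tokens; assumed to track TokenTransaction."""
    return sum(i.prompt_tokens + i.generated_tokens for i in intervals)


# Hypothetical sample data for two time slots.
sample = [Interval(200, 4, 12_000, 3_500), Interval(300, 6, 18_000, 5_000)]
print(f"{error_rate_percent(sample):.1f}%")  # 2.0%
print(inference_tokens(sample))              # 38500
```

Summing calls and errors over the window before dividing, rather than averaging per-interval rates, keeps low-traffic intervals from skewing the result.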